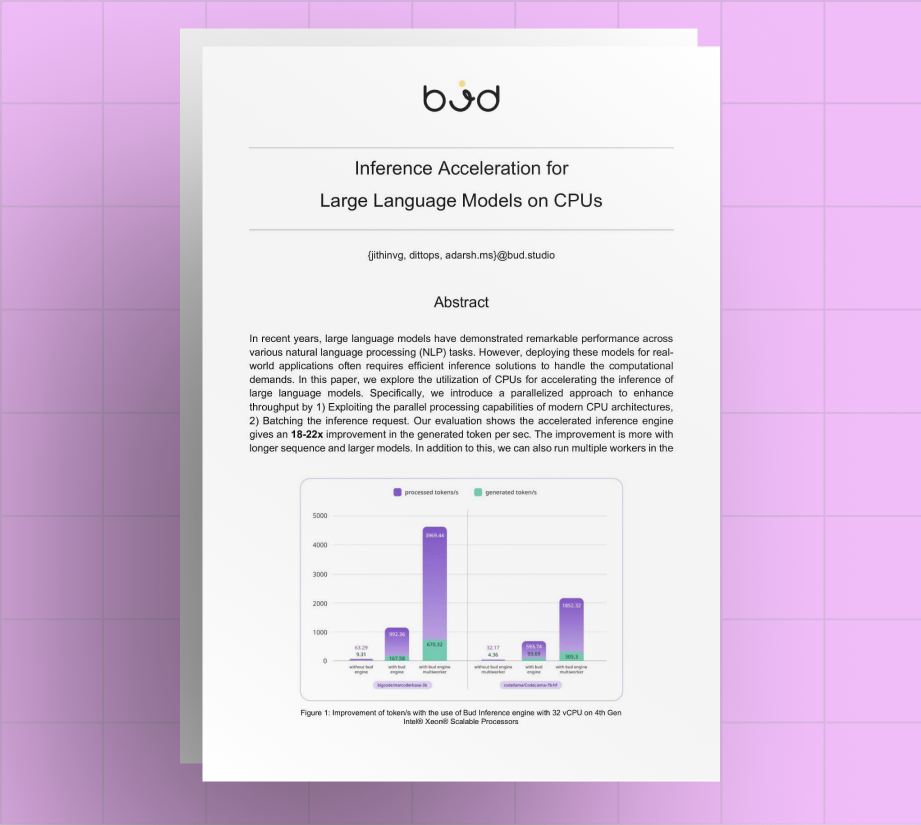

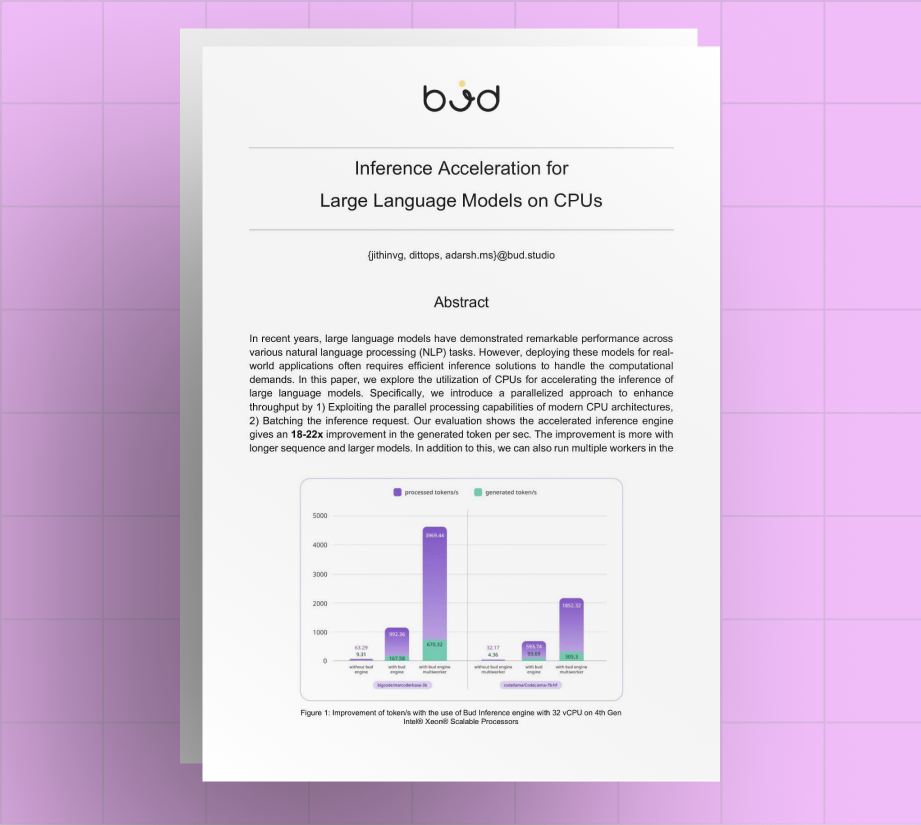

In this paper, we explore the utilization of CPUs for accelerating the inference of large language models.


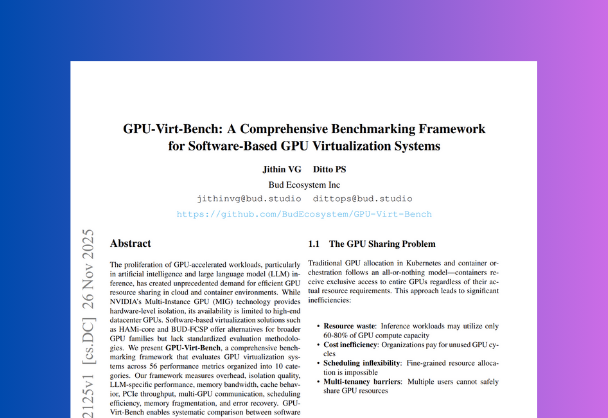

We present GPU-Virt-Bench, a comprehensive benchmarking framework that evaluates GPU virtualization systems across 56 performance metrics organized into 10 categories.

This method not only reduces the traffic to the cloud LLM, thereby lowering costs, but also allows for flexible control over response quality depending on the reward score threshold.

With a composition of 11.53 billion tokens, integrating 8.01 billion tokens of synthetic data with 3.52 billion tokens of rich textbook data, Intellecta is crafted to foster advanced reasoning and comprehensive educational narrative generation.
