Bud AI Foundry & Models ensures affordable and secure private AI at scale.

Enterprise AI adoption today is still painfully hard, blocked by infrastructure sprawl, high costs, scarce talent, and growing regulatory and operational overhead. The result? GenAI remains out of reach for many organizations, and progress stalls in pilots instead of turning into outcomes.

Bud changes that by commoditizing GenAI, turning it from a complex, bespoke engineering effort into an accessible, reliable utility. We're building an AI Fabric that connects every kind of model, hardware, cloud, and agent architecture while abstracting away the technical heavy lifting. That means faster deployment, simpler governance, and dramatically more affordable AI at enterprise scale.

This lets teams stop getting distracted by integrations, orchestration, and infrastructure, and start focusing on what actually matters: measurable business impact.

Today, most teams deploy GenAI by stitching together a patchwork of third-party tools and services—many of them outdated, and many built for the previous generation of AI and software.

The result is a brittle system: a messy, fragmented stack that slows teams down and drives costs up. It's hard to adopt, harder to scale, and often unreliable in production, turning what should be a competitive advantage into an ongoing integration and maintenance burden.

Everything GenAI in One Platform

A successful, production-ready enterprise GenAI program needs a lot more than just a model: cost and performance optimization, guardrails and safeguards, observability with analytics and reporting, seamless builders for agents and workflows, evaluations and experimentation, RBAC and administration, security and compliance, and the ability to adapt, scale, and deploy with zero configuration. It also requires performant models and agents, practical tools for end users and consumers, and strong FinOps to keep spend predictable and accountable. Bud brings all of this together in one unified platform—so teams can move from pilots to reliable, governed, enterprise-grade GenAI in production.

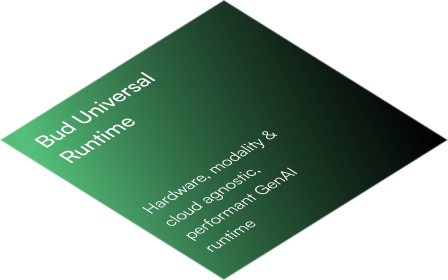

Bud is designed to deliver optimal performance while minimizing total cost of ownership. It enables you to launch GenAI on commodity hardware—whether CPUs or widely available GPUs—and scale seamlessly as your business needs grow. With efficient resource utilization, smart scaling, and hardware-agnostic deployment, Bud optimizes costs while maximizing performance per dollar spent.

Bud makes your infrastructure intelligent—self-optimising and adaptive to workload demands so performance stays high and resources stay right-sized. Bud AI Foundry continuously monitors inference in real time for failures, slowdowns, and anomalies, and when it detects issues, it responds automatically: restarting services, redirecting traffic, or spinning up new instances to maintain uptime and keep production systems stable.
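The remediation logic inside Bud AI Foundry isn't described here, so the following is only a minimal sketch of how a generic rule-based policy might map an instance's health snapshot to the three responses named above (restart, redirect traffic, spin up capacity). The `InstanceHealth` fields, thresholds, and function names are hypothetical illustrations, not Bud's API.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    RESTART = "restart"        # restart the service
    REDIRECT = "redirect"      # drain traffic to healthy instances
    SCALE_OUT = "scale_out"    # spin up additional instances


@dataclass
class InstanceHealth:
    healthy_probe: bool       # did the liveness probe succeed?
    error_rate: float         # fraction of failed requests in the window
    p95_latency_ms: float     # observed 95th-percentile latency
    utilization: float        # fraction of capacity in use (0.0 to 1.0)


# Hypothetical thresholds; a real system would derive these from its SLOs.
MAX_ERROR_RATE = 0.05
LATENCY_SLO_MS = 500.0
HIGH_UTILIZATION = 0.85


def choose_action(h: InstanceHealth) -> Action:
    """Map one instance's health snapshot to a remediation action."""
    if not h.healthy_probe:
        return Action.RESTART            # process is down or hung
    if h.error_rate > MAX_ERROR_RATE:
        return Action.REDIRECT           # failing requests: move traffic away
    if h.p95_latency_ms > LATENCY_SLO_MS:
        # Slow because overloaded: add capacity.
        # Slow while lightly loaded: likely a degraded instance, so redirect.
        if h.utilization > HIGH_UTILIZATION:
            return Action.SCALE_OUT
        return Action.REDIRECT
    return Action.NONE
```

For example, an instance that passes its probe but shows a p95 of 800 ms at 95% utilization yields `Action.SCALE_OUT`. Ordering the checks from most to least severe keeps the policy deterministic when several symptoms appear at once.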

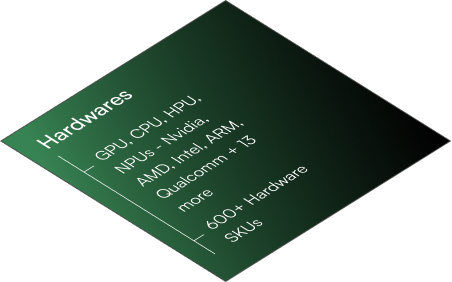

Bud makes GenAI initiatives scalable by removing infrastructure lock-in and automating the hard parts of running production AI. It's hardware- and cloud-agnostic, running across GPUs, CPUs, TPUs, NPUs and other accelerators from multiple vendors, and deploying seamlessly across 12+ clouds, private data centers, or the edge. With zero-config, SLO-aware scaling, Bud automatically scales models, agents, and tools across heterogeneous hardware without manual tuning, while intelligent orchestration schedules workloads across clusters and regions with built-in failover and disaster recovery to keep systems resilient at scale.
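To make "SLO-aware scaling across heterogeneous hardware" concrete, here is a minimal sketch of one common approach, assuming each hardware pool's per-replica throughput at the latency SLO has already been measured (for example, by load testing). The function names, the 70% target utilization, and the pool figures in the usage note are hypothetical; this is not Bud's scheduler.

```python
import math
from typing import Dict, Tuple


def replicas_needed(
    request_rate_rps: float,
    per_replica_rps_at_slo: float,
    target_utilization: float = 0.7,
    min_replicas: int = 1,
) -> int:
    """Smallest replica count that serves the load without breaching the SLO."""
    if per_replica_rps_at_slo <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity and target utilization must be positive")
    # Plan against a fraction of measured capacity to leave burst headroom.
    raw = request_rate_rps / (per_replica_rps_at_slo * target_utilization)
    return max(min_replicas, math.ceil(raw))


def cheapest_plan(
    request_rate_rps: float,
    pools: Dict[str, Tuple[float, float]],
    target_utilization: float = 0.7,
) -> Tuple[str, int, float]:
    """Pick the hardware pool that meets the SLO at the lowest hourly cost.

    pools maps a pool name to (per-replica RPS at the SLO, cost per replica-hour).
    """
    plans = []
    for name, (capacity_rps, hourly_cost) in pools.items():
        n = replicas_needed(request_rate_rps, capacity_rps, target_utilization)
        plans.append((n * hourly_cost, name, n))
    cost, name, n = min(plans)  # lowest total hourly cost wins
    return name, n, cost
```

With 100 requests per second and illustrative pools `{"gpu": (50, 4.0), "cpu": (5, 0.5)}`, the GPU pool needs 3 replicas (12.0 per hour) while the CPU pool needs 29 (14.5 per hour), so `cheapest_plan` returns `("gpu", 3, 12.0)`. At lower load the same calculation can favor CPUs, which is why comparing pools per workload matters.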

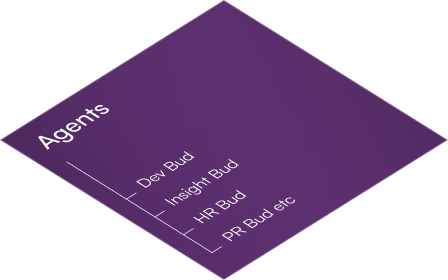

Bud simplifies GenAI by giving you an opinionated, end-to-end platform that abstracts away the technical complexity required to run AI in production. Instead of manually stitching together infrastructure, tuning performance, and managing scaling, Bud automates scaling and optimisation out of the box, so your models and agents stay fast, reliable, and cost-efficient without constant engineering effort. And with Bud Agent, you can run the entire GenAI lifecycle in natural language: manage deployments, run optimisations, execute evaluations, and handle operational tasks through simple instructions, turning what used to be weeks of DevOps and MLOps work into a guided, streamlined workflow.

Bud is built to run GenAI safely and responsibly from day one, with guardrails and governance baked into the platform—not bolted on later. It supports regulatory and industry compliance needs by design, aligning with major AI and privacy frameworks (including White House and EU guidance, GDPR, and SOC 2–style controls) to help teams operate securely and credibly. Bud SENTRY provides a zero-trust security layer across model downloads, deployments, and inference, while granular access control and RBAC enforce least-privilege permissions for sensitive data and workflows. Continuous audit logging, monitoring, and alerting ensure end-to-end traceability—so you can detect issues early, prove compliance, and keep production AI under control.

Bud enables private AI by bringing the full GenAI stack into your own environment—so your data, models, and workloads never leave your control. With on-premise deployment, encrypted communications, and zero data retention policies, Bud ensures complete data sovereignty while delivering enterprise-grade performance.

Bud enables high-performance GenAI by optimising the full inference path—model, workload, and hardware—automatically. It applies the right mix of parallelism, quantisation, and execution strategies so you get maximum throughput without manual tuning, and routes requests through a high-speed AI gateway built for ultra-low latency (with sub-millisecond gateway overhead for real-time interactions). Bud also uses distributed KV caching to share context across instances, cutting latency and boosting concurrency under heavy workloads. In practice, this translates into major performance gains—up to ~3X higher throughput on NVIDIA GPUs, ~1.5X on other accelerators, and up to 12X faster cold starts—so production systems stay fast, responsive, and cost-efficient as usage scales.
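Bud's distributed KV cache design isn't detailed here. The sketch below illustrates the general prefix-caching idea it refers to: prompt tokens are split into fixed-size blocks, each block is keyed by a chained hash of the whole prefix up to that block, and any instance can look up how many leading tokens already have cached KV tensors. The in-memory dict standing in for a cluster-wide store, the block size, and all names are hypothetical simplifications.

```python
import hashlib
from typing import Dict, List, Sequence

BLOCK_SIZE = 4  # tokens per cache block (kept tiny here for illustration)


def block_hashes(token_ids: Sequence[int], block_size: int = BLOCK_SIZE) -> List[str]:
    """Chain-hash each full block so a block's key depends on the whole prefix."""
    hashes: List[str] = []
    parent = ""
    full = len(token_ids) - len(token_ids) % block_size  # ignore a partial tail block
    for start in range(0, full, block_size):
        block = token_ids[start:start + block_size]
        payload = parent + ":" + ",".join(map(str, block))
        h = hashlib.sha256(payload.encode()).hexdigest()
        hashes.append(h)
        parent = h
    return hashes


class SharedKVStore:
    """Stand-in for a store shared by all instances (a plain dict here)."""

    def __init__(self) -> None:
        self._blocks: Dict[str, object] = {}

    def put(self, token_ids: Sequence[int], kv_blocks: Sequence[object]) -> None:
        """Publish the KV tensors computed for each full block of a prompt."""
        for h, kv in zip(block_hashes(token_ids), kv_blocks):
            self._blocks[h] = kv

    def reusable_prefix_tokens(self, token_ids: Sequence[int]) -> int:
        """Number of leading tokens whose KV tensors are already cached."""
        hits = 0
        for h in block_hashes(token_ids):
            if h not in self._blocks:
                break  # the first miss ends the reusable prefix
            hits += 1
        return hits * BLOCK_SIZE
```

If one instance publishes the KV blocks for tokens 1 to 8, another instance serving tokens 1 to 12 finds 8 reusable tokens and only has to prefill the last 4. Chaining the hashes ensures that identical blocks appearing after different prefixes never collide.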

Bud lets you start small with production-ready GenAI infrastructure running on CPUs, so you can prove value with GenAI for as little as $500 before you decide to scale.

Once you are ready, you can scale across more CPUs, GPUs, HPUs, NPUs, and LPUs from hardware vendors such as NVIDIA, Intel, AMD, Arm, Groq, and Huawei without code changes or additional development. You can also scale your infrastructure on-premises or across the 16 cloud providers we currently support, keeping scale-up costs as low as possible with no long-term reservations or lock-in.

You define your desired outcomes, SLOs, and budgetary constraints; we deliver customized small language models (SLMs) that meet those criteria while performing on par with state-of-the-art cloud LLMs. Regular model refreshes keep your solution up to date and aligned with the latest advances in model intelligence.
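As a rough illustration of turning outcomes, SLOs, and a budget into a model choice, here is a minimal sketch that picks the cheapest candidate satisfying every constraint. The `Candidate` and `Requirements` fields, the model names, and all figures are hypothetical; they stand in for whatever latency, quality, and cost measurements a real evaluation would produce, and this is not Bud's selection process.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    p95_latency_ms: float     # measured on the target hardware
    quality: float            # score on the customer's eval set, 0 to 1
    monthly_cost_usd: float   # projected serving cost at expected load


@dataclass(frozen=True)
class Requirements:
    max_p95_latency_ms: float  # latency SLO
    min_quality: float         # desired outcome, expressed as an eval score
    monthly_budget_usd: float  # budgetary constraint


def select_model(cands: List[Candidate], req: Requirements) -> Optional[Candidate]:
    """Cheapest candidate meeting every SLO and the budget; None if none fits."""
    feasible = [
        c for c in cands
        if c.p95_latency_ms <= req.max_p95_latency_ms
        and c.quality >= req.min_quality
        and c.monthly_cost_usd <= req.monthly_budget_usd
    ]
    # Break cost ties in favor of the higher-quality model.
    return min(feasible, key=lambda c: (c.monthly_cost_usd, -c.quality), default=None)
```

With illustrative candidates `slm-1b` (120 ms, 0.78, $400), `slm-3b` (210 ms, 0.86, $900), and `slm-8b` (450 ms, 0.90, $2,100) and requirements of at most 300 ms p95, a quality of at least 0.85, and a $1,500 budget, only `slm-3b` qualifies. Returning `None` when nothing fits makes an unmet requirement explicit, signalling that a new or further-tuned model is needed rather than silently relaxing a constraint.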
