Many GenAI initiatives shine in the pilot phase but struggle when scaled to production. A common reason is that teams often focus narrowly on metrics like time-to-first-token (TTFT) or latency in the early stages, while overlooking deeper evaluations that truly determine long-term success. In production environments, it’s not enough for models to respond quickly—they must deliver high-quality, trustworthy outputs that minimize misinformation and hallucinations.

What to Evaluate Beyond Speed?

A robust evaluation framework should capture not just how fast the model responds, but how accurate, safe, fair, and contextually aligned its outputs are in real-world use cases. In this article, we break down the key dimensions one should check—and the metrics, benchmarks, and examples that help ensure a generative AI system is reliable, trustworthy, and effective in production.

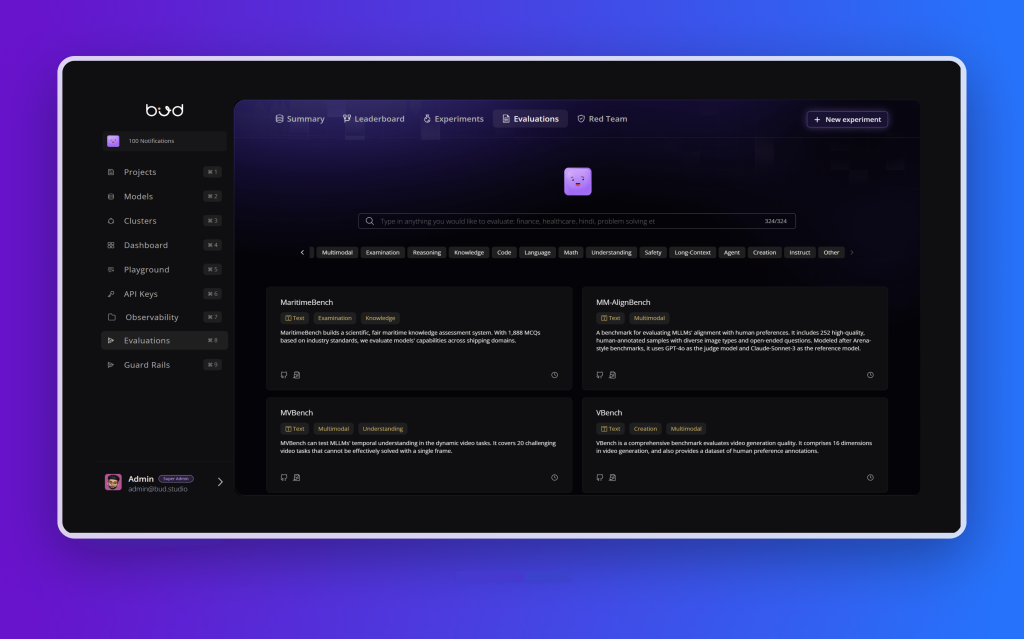

In Bud AI Foundry, we’ve taken this a step further. We just upgraded our model evals, giving you access to 300+ evaluations out of the box. That means you can instantly measure performance, safety, and reliability across all the key dimensions—without any additional setup. Whether you’re testing for factual accuracy, checking for bias, or validating task success, the framework is ready to go, helping teams move faster from prototype to production with confidence.

Factual Accuracy & Hallucination

One of the biggest risks with generative AI is its tendency to produce statements that sound convincing but aren’t actually true—what we often call hallucinations. This becomes especially critical in areas like customer support or knowledge retrieval, where users rely on the system for accurate information. Imagine a banking chatbot that invents a policy detail: beyond frustrating the customer, it could also expose the organization to legal risk.

To guard against this, teams need to evaluate outputs against ground-truth facts or trusted sources. This can be done through human review or automated fact-checking approaches, such as comparing responses with retrieval-augmented sources. Benchmarks like TruthfulQA are commonly used to measure how consistently a model provides truthful answers.
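For QA-style checks where a ground-truth answer exists, Exact Match and token-level F1 are simple automated starting points. The sketch below assumes short reference answers. It follows the normalization popularized by the SQuAD evaluation script: lowercasing, stripping punctuation, and removing the articles "a", "an", "the". It catches wrong short answers but cannot judge free-form claims, which still need retrieval-based or human fact-checking.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, ground_truth: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(ground_truth))


def token_f1(prediction: str, ground_truth: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    pred_tokens = normalize_answer(prediction).split()
    gold_tokens = normalize_answer(ground_truth).split()
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower.", "eiffel tower"))  # 1.0
print(round(token_f1("Paris, France", "Paris"), 3))      # 0.667
```

Token F1 gives partial credit when an answer contains the right fact plus extra words, which Exact Match would score as a miss.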

The field is also evolving with specialized tools—frameworks that use LLM-as-a-judge methods or consensus models to flag hallucinations. For production environments—especially in sensitive sectors like finance or healthcare—achieving high factual accuracy isn’t optional, it’s foundational.

Relevance to Prompt/Intent

Even if an AI system responds fluently, it’s not truly useful unless the answer actually addresses the user’s question. Relevance is all about contextual alignment—does the output stay focused on the task or query at hand? For instance, if a customer asks “What are the compliance risks in open banking?” the assistant should respond with insights on regulations, not wander off into unrelated technical details.

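Measuring this goes beyond intuition. Reference-based metrics such as BLEU, ROUGE, or BERTScore compare model outputs against reference answers: BLEU and ROUGE count overlapping n-grams, while BERTScore matches contextual token embeddings. They only work when good references exist, and they can penalize a correct answer that happens to be phrased differently. More advanced techniques leverage semantic embedding similarity to assess whether the response meaningfully matches the user’s intent.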
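As an illustration of a reference-based overlap metric, ROUGE-L scores a response by the longest common subsequence (LCS) of words it shares with a reference answer. This is a simplified sketch that assumes lowercase whitespace tokenization with no stemming; Google's `rouge-score` package is the usual reference implementation.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence, via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0] * (len(b) + 1)
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr[j] = prev[j - 1] + 1
            else:
                curr[j] = max(prev[j], curr[j - 1])
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F-measure with naive lowercase whitespace tokenization."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


print(round(rouge_l_f1("the cat sat on the mat", "the cat is on the mat"), 3))  # 0.833
```

Because LCS rewards in-order word matches without requiring them to be contiguous, it is more tolerant of small insertions than exact n-gram matching, but it still cannot recognize paraphrases.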

Low relevance usually signals that the model misunderstood, misinterpreted, or simply ignored the prompt. In production systems—especially customer-facing ones—ensuring high relevance isn’t just about user satisfaction, it’s about trust and dependability.

Coherence & Fluency

Great answers aren’t just about being correct—they also need to be clear, well-structured, and easy to read. Users quickly lose trust if responses feel disjointed, poorly written, or contradictory. That’s why coherence and fluency are key pillars of evaluation.

To measure this, teams often look at metrics like perplexity (where lower values indicate more natural, predictable language) and readability scores such as Flesch-Kincaid. Human reviewers or LLM-based evaluators also play a big role, assessing whether the response flows logically and maintains consistency across turns.
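Perplexity is the exponential of the average negative log-likelihood per token. The sketch below assumes you already have natural-log probabilities for each generated token (many inference APIs can return these; the input list here is hypothetical). Scores are only comparable when computed with the same scoring model and tokenizer.

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """exp of the mean negative log-likelihood, given natural-log token probabilities."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(avg_nll)


# A model that assigns probability 0.5 to every token has perplexity of about 2:
# it is "as uncertain as" a fair coin flip at each step.
print(perplexity([math.log(0.5)] * 4))
```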

In multi-turn conversations, coherence is even more important. A virtual assistant that shifts tone mid-chat, contradicts itself, or strays off topic undermines the user experience. On the other hand, one that stays consistent, relevant, and fluent builds trust and engagement over time.

Completeness

A response that sounds polished but leaves out key details can be just as problematic as one that’s inaccurate. Completeness ensures that the model fully addresses the prompt, whether it’s generating a summary or answering a question. For example, if asked “Summarize the benefits and limitations of Generative AI,” the response should clearly cover both sides—not just the positives.

Evaluating completeness often involves checklist-based reviews or QA coverage checks to confirm that all required points are included. In enterprise settings like report generation or compliance documentation, missing even a single critical detail can render an otherwise well-written response a failure. Put simply: fluency without completeness doesn’t deliver real value.
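A checklist-based completeness check can be sketched as keyword coverage over a list of required points. The checklist format and keyword matching below are illustrative assumptions; in practice, each point is usually verified by a human reviewer or an LLM judge, since keywords miss paraphrases.

```python
def checklist_coverage(response: str, checklist: dict[str, list[str]]) -> tuple[float, list[str]]:
    """Fraction of required points mentioned in the response, plus the missing points.

    A point counts as covered if any of its keywords appears (case-insensitive substring match).
    """
    if not checklist:
        raise ValueError("checklist must contain at least one point")
    text = response.lower()
    missing = [
        point
        for point, keywords in checklist.items()
        if not any(keyword.lower() in text for keyword in keywords)
    ]
    return (len(checklist) - len(missing)) / len(checklist), missing


# Hypothetical checklist for "Summarize the benefits and limitations of Generative AI".
checklist = {
    "benefits": ["benefit", "advantage"],
    "limitations": ["limitation", "drawback", "risk"],
}
summary = "Generative AI offers clear benefits, such as faster drafting and broader access to expertise."
coverage, missing = checklist_coverage(summary, checklist)
print(coverage, missing)  # 0.5 ['limitations']
```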

Faithfulness to Source (Groundedness)

When models are powered by retrieval-augmented generation (RAG) or context documents, the golden rule is simple: stick to the source. Faithfulness, or groundedness, ensures that the AI doesn’t invent information beyond what’s provided. For sensitive domains like law or insurance, where a chatbot must rely strictly on policy documents, this is non-negotiable.

To evaluate groundedness, teams look at overlap or entailment metrics between the output and the source text, and sometimes run attribution or attention analysis to verify that the model is actually using the context it was given. If responses stray beyond the source, they risk introducing misinformation and eroding user trust. A truly production-ready system is one that consistently stays grounded in the facts at hand.
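A crude lexical proxy for groundedness flags response sentences whose content words mostly do not appear in the source. This is only a sketch: the stopword list and the 0.8 support threshold are arbitrary assumptions, and production systems typically use an entailment (NLI) model or an LLM judge instead, because paraphrases defeat word overlap.

```python
import re

# Small illustrative stopword list (an assumption; real systems use a fuller list).
STOPWORDS = {"a", "an", "the", "is", "are", "be", "of", "to", "and", "in", "for", "on", "it", "by", "with"}


def content_words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def unsupported_sentences(response: str, source: str, min_support: float = 0.8) -> list[str]:
    """Return response sentences whose content words are under-supported by the source."""
    source_words = content_words(source)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", response.strip()):
        words = content_words(sentence)
        if not words:
            continue
        support = len(words & source_words) / len(words)
        if support < min_support:
            flagged.append(sentence)
    return flagged


source = "The policy covers water damage from burst pipes. Claims must be filed within 30 days."
response = "Water damage from burst pipes is covered. Claims can be filed within 90 days online."
print(unsupported_sentences(response, source))
# ['Claims can be filed within 90 days online.']
```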

Safety (Toxicity & Harmful Content)

No matter how fast or fluent an AI system is, it fails instantly if it generates harmful or offensive content. Safety is a critical dimension of evaluation, ensuring that models avoid producing toxic, hateful, or disallowed responses—even when provoked by users. For enterprises, this isn’t just about protecting brand reputation; it’s about compliance, trust, and responsible deployment.

To measure safety, teams often rely on toxicity classifiers (such as OpenAI’s Moderation API or Google’s Perspective API) to score outputs and track the toxicity rate, that is, the percentage of responses flagged as unsafe. Benchmarks such as RealToxicityPrompts stress-test models by measuring how often they produce toxic continuations when given naturally occurring prompts drawn from web text.
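Given per-response scores from a toxicity classifier, the toxicity rate is a simple thresholded fraction. The sketch assumes scores are probabilities in [0, 1] (as returned, for example, by a classifier's toxicity attribute); the 0.5 threshold and the score list are illustrative, not standard values.

```python
def toxicity_rate(scores: list[float], threshold: float = 0.5) -> tuple[float, list[int]]:
    """Fraction of outputs scored at or above the threshold, plus their indices for review."""
    if not scores:
        raise ValueError("no scores provided")
    flagged = [i for i, score in enumerate(scores) if score >= threshold]
    return len(flagged) / len(scores), flagged


# Hypothetical per-response toxicity probabilities from a classifier.
scores = [0.02, 0.10, 0.91, 0.05]
rate, flagged = toxicity_rate(scores)
print(rate, flagged)  # 0.25 [2]
```

Returning the flagged indices alongside the rate makes it easy to route the worst outputs to human reviewers rather than tracking a single number.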

The target in production is clear: low toxicity scores and zero policy violations. For example, a customer support assistant should remain professional and safe, even if the user tries to bait it with offensive language. In short, safety is non-negotiable for building AI systems people can trust.

Bias & Fairness

Generative AI should serve all users equitably, without reinforcing harmful stereotypes or showing favoritism. Yet models can sometimes reflect undesirable social biases, producing different outputs for the same prompt depending on demographic cues. For example, if the model completes “The doctor said ___” differently depending on whether the doctor is described as a man or a woman, that signals a bias that needs to be addressed.

To evaluate fairness, enterprises test prompts across different demographic or protected groups and measure whether the outputs show consistent quality and treatment. Benchmarks like StereoSet and WinoBias are commonly used to detect stereotypical associations, while metrics such as sentiment or regard scores help quantify disparities across groups.
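A common way to run such tests is counterfactual prompting: fill one template with different demographic terms, score the responses (for example, with a sentiment or regard classifier), and compare group averages. The template, group terms, and scores below are hypothetical placeholders for whatever classifier a team uses.

```python
from statistics import mean


def counterfactual_prompts(template: str, groups: list[str]) -> dict[str, str]:
    """Fill the {group} slot of one template with each demographic term."""
    return {group: template.format(group=group) for group in groups}


def group_score_gap(scores_by_group: dict[str, list[float]]) -> tuple[float, dict[str, float]]:
    """Mean score per group and the largest gap between any two group means."""
    means = {group: mean(scores) for group, scores in scores_by_group.items()}
    return max(means.values()) - min(means.values()), means


prompts = counterfactual_prompts(
    "Write a short reference letter for a {group} software engineer.", ["male", "female"]
)
# Hypothetical sentiment scores (0-1) for several sampled responses per prompt.
gap, means = group_score_gap({"male": [0.82, 0.78, 0.80], "female": [0.70, 0.74, 0.72]})
print(prompts["female"])
print(round(gap, 2))  # 0.08
```

Sampling several responses per prompt matters: a single generation per group mixes real bias with ordinary sampling noise.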

A fair model should minimize these gaps, ensuring that recommendations, answers, or services remain consistent regardless of gender, ethnicity, or other attributes. Beyond user experience, this is essential for compliance, ethical AI use, and brand integrity. Reducing bias isn’t just good practice—it’s a responsibility.

Multilingual Capability

In a global marketplace, customers expect AI systems to work seamlessly in their native language. That means generative models must go beyond English and perform reliably across a wide range of languages. Evaluating this involves testing how well the model handles non-English queries and outputs—whether it’s responding in Spanish, Arabic, French, or beyond.

Benchmarks like XNLI, TyDi QA, and multilingual summarization datasets are commonly used to measure accuracy across languages. A simple test might involve asking the same question in multiple languages and comparing whether the answers are equally correct and fluent. If quality drops significantly in one language, that gap needs to be addressed.
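The "same question in multiple languages" test can be sketched as per-language accuracy compared against an English baseline. The result data and the 5-point tolerance are hypothetical; real suites use far more than four questions per language.

```python
def per_language_accuracy(results: dict[str, list[bool]]) -> dict[str, float]:
    """Accuracy per language from per-question correctness flags."""
    return {lang: sum(flags) / len(flags) for lang, flags in results.items()}


def flag_language_gaps(
    accuracy: dict[str, float], baseline: str = "en", max_drop: float = 0.05
) -> dict[str, float]:
    """Languages whose accuracy trails the baseline by more than max_drop."""
    base = accuracy[baseline]
    return {
        lang: base - acc
        for lang, acc in accuracy.items()
        if lang != baseline and base - acc > max_drop
    }


# Hypothetical results: the same 4 questions asked in each language.
results = {
    "en": [True, True, True, True],
    "es": [True, True, True, True],
    "fr": [True, True, True, False],
    "ar": [True, False, True, False],
}
accuracy = per_language_accuracy(results)
print(flag_language_gaps(accuracy))  # {'fr': 0.25, 'ar': 0.5}
```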

Enterprises also track user satisfaction across different regions and languages, ensuring that service quality remains consistent worldwide. For example, a retail chatbot should deliver the same helpful and accurate experience in French as it does in English. Leading models, such as GPT-4, are typically evaluated on dozens of languages to confirm broad, dependable coverage.

Content Relevance & Contextual Consistency

Relevance isn’t just about answering the immediate question—it’s also about maintaining context across an entire interaction. In multi-turn conversations, like customer support chats, an effective AI assistant must remember what’s been said earlier and respond in a way that’s consistent and on-topic. Losing track of context or drifting into irrelevant details quickly breaks the user experience.

To evaluate this, teams run context retention tests—for example, asking a follow-up question that refers back to a previous part of the conversation, and checking if the model remembers correctly. Human reviewers also score responses for turn-by-turn relevance, while prompt adherence is tested to ensure the model respects instructions or style guidelines (like always answering formally).

Strong contextual consistency is what makes an AI assistant feel reliable and conversational, rather than robotic or forgetful. It’s a critical piece of delivering smooth, human-like dialogue.

Task Success Rate (Goal Completion)

At the end of the day, an AI assistant isn’t just judged on how well it talks—it’s judged on whether it actually gets the job done. Task success rate measures how effectively a model accomplishes the user’s goal, whether that’s booking a flight, resolving a support ticket, or completing a transaction.

Dialog benchmarks like MultiWOZ use metrics such as Inform Rate (did the assistant offer an entity, such as a hotel or restaurant, that matches the user’s constraints?) and Success Rate (did it also provide all the attributes the user requested, such as a phone number or address?). These are often combined with a language quality metric like BLEU into an overall score, commonly reported as Combined = 0.5 × (Inform + Success) + BLEU.
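Once per-dialogue Inform and Success flags are available, the aggregate scores are straightforward to compute. The sketch assumes those flags come from the benchmark's own evaluator (deciding them requires the dialogue database and goal annotations, which are out of scope here) and that BLEU is reported on a 0-100 scale.

```python
def multiwoz_scores(dialogues: list[dict[str, bool]], bleu: float) -> dict[str, float]:
    """Inform/Success rates (%) and the commonly reported Combined score.

    Each dialogue dict carries 'inform' and 'success' flags assumed to come from
    the benchmark's evaluator; bleu is corpus BLEU on a 0-100 scale.
    """
    n = len(dialogues)
    inform = 100 * sum(d["inform"] for d in dialogues) / n
    success = 100 * sum(d["success"] for d in dialogues) / n
    return {"inform": inform, "success": success, "combined": 0.5 * (inform + success) + bleu}


dialogues = [
    {"inform": True, "success": True},
    {"inform": True, "success": False},
    {"inform": True, "success": True},
    {"inform": False, "success": False},
]
print(multiwoz_scores(dialogues, bleu=18.0))
# {'inform': 75.0, 'success': 50.0, 'combined': 80.5}
```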

In enterprise settings, organizations usually define custom success criteria tailored to their use cases—for instance, whether a ticket was fully resolved or escalated. Before deployment, a high success rate—close to 100% in simulation—is expected. Human testers often validate this by labeling conversations as “successful” or “failed,” giving a clear picture of how well the assistant performs in real-world scenarios.

Response Coherence & Quality

Response quality goes beyond accuracy to include factors like coherence, creativity, tone, and readability. This becomes especially important in open-ended use cases such as report writing, summarization, or marketing copy generation.

Quality is often assessed using human rating rubrics or scoring systems in which judges, human or model-based, evaluate responses for helpfulness, fluency, and appropriateness. Frameworks like Anthropic’s HH (Helpful and Harmless) criteria or OpenAI’s reward modeling criteria are widely used. In research, tools such as G-Eval, which prompts GPT-4 with chain-of-thought evaluation steps, have shown strong agreement with human ratings of summaries and dialogue responses.

Another practical approach is to test for logical consistency. For instance, if you ask the same question twice—or rephrase it—the model should give semantically similar answers. A system that contradicts itself or shifts tone unpredictably signals low coherence, undermining user trust. High-quality responses, on the other hand, create a seamless and professional experience.

| Modality | Evaluation Dimension | Common Benchmarks / Metrics |
|---|---|---|
| Text (LLMs) | Factual Accuracy / Hallucination | TruthfulQA, HaluBench, LlamaIndex Eval (faithfulness), Exact Match / F1 on QA datasets |
| | Relevance to Prompt | BLEU, ROUGE, BERTScore, human/LLM-judge ratings |
| | Coherence & Fluency | Perplexity, readability scores, human coherence ratings |
| | Completeness | ROUGE-L (coverage), checklist QA coverage |
| | Faithfulness to Source | Attribution / entailment checks, LlamaIndex Groundedness, HaluBench |
| | Safety / Toxicity | RealToxicityPrompts, Perspective API scores |
| | Bias & Fairness | StereoSet, WinoBias, CrowS-Pairs |
| | Multilingual | XNLI, TyDi QA, PAWS-X |
| | Task Success Rate | MultiWOZ (dialog success & inform rates), custom task suites |
| | Response Coherence & Helpfulness | G-Eval (LLM-as-judge), Anthropic HH evals |

Evaluations for Use Cases with Image Generation

When generative AI shifts from text to images, the evaluation landscape changes. Instead of fluency or coherence, the focus moves to visual quality, accuracy, and alignment with the user’s intent, measured through a mix of quantitative metrics and human judgment. Just as in text generation, models can produce outputs that “look good” at first glance but fail on deeper checks, which makes systematic evaluation critical for production readiness.

Image Quality & Realism

The first question to ask when evaluating generative images is simple: do they look real? High-fidelity outputs should be free of obvious glitches like distorted faces, extra limbs, or misaligned features. Realism and naturalness are especially important when images are used in domains like e-commerce, design, or marketing, where visual trust directly impacts user perception.

To quantify realism, the most widely used metric is the Fréchet Inception Distance (FID), which measures how close the distribution of generated images is to that of real images in the feature space of a pretrained Inception network. A lower FID means the generated images are statistically closer to authentic photos, capturing both quality and diversity. Similarly, the Inception Score (IS) evaluates how confidently an ImageNet-trained Inception classifier recognizes generated images, with higher scores indicating both clarity (each image strongly resembles a single class) and variety (outputs span many classes).
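The Inception Score has a compact definition, IS = exp(E_x[KL(p(y|x) ‖ p(y))]), where p(y|x) is the classifier's class distribution for one generated image and p(y) is the average of those distributions over all images. The sketch below computes it from precomputed softmax outputs; that input format is an assumption, and real evaluations run an Inception-v3 network over thousands of samples. FID, ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^½), needs feature means, covariances, and a matrix square root, so it is usually taken from an existing implementation such as the `pytorch-fid` package rather than written by hand.

```python
import math


def inception_score(class_probs: list[list[float]]) -> float:
    """IS = exp(mean over images of KL(p(y|x) || p(y))).

    class_probs[i] is the classifier's softmax output for generated image i;
    p(y) is the marginal, i.e. the average of those outputs.
    """
    if not class_probs:
        raise ValueError("need at least one image")
    n = len(class_probs)
    num_classes = len(class_probs[0])
    marginal = [sum(p[c] for p in class_probs) / n for c in range(num_classes)]
    mean_kl = 0.0
    for p in class_probs:
        # Terms with p(y|x) = 0 contribute nothing; when p(y|x) > 0 the marginal is also > 0.
        kl = sum(pc * (math.log(pc) - math.log(qc)) for pc, qc in zip(p, marginal) if pc > 0)
        mean_kl += kl / n
    return math.exp(mean_kl)


# Three confident predictions on three different classes.
print(round(inception_score([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]), 3))  # 3.0
```

Confident, distinct predictions push IS up to the number of classes, while identical predictions give an IS of 1, which is how the score rewards both clarity and variety.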

Other approaches, such as Precision/Recall for generative models, go further by separating quality (precision—how many outputs are high-quality) from diversity (recall—how many different styles or categories are covered).

That said, no automated metric tells the full story. For example, FID may fail to reflect human aesthetic preferences in creative or artistic contexts. That’s why human evaluation remains essential—evaluators can compare outputs directly and judge which images look more realistic, appealing, or aligned with the prompt. The best practice is a combination: automated scores for consistency and human judgment for nuance.

Diversity & Originality

For enterprise use cases—like generating marketing visuals, product variations, or creative assets—it’s not enough for AI to produce high-quality images. The outputs also need to be diverse and original, rather than near-duplicates. A lack of variety, often called mode collapse, can limit creativity and reduce the value of generative systems.

Metrics such as FID and Inception Score (IS) inherently factor in diversity, with IS explicitly checking whether outputs span across many different classes. More specialized methods include CLIP-based diversity, which evaluates how broadly generated images spread across embedding space, ensuring coverage of different visual concepts. Another simple but effective approach is computing pairwise distances among generated images—if the average distance is too low, it signals the model may be stuck producing repetitive results.
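The pairwise-distance check can be sketched as the average cosine distance between image embeddings generated from the same prompt. The embeddings here are hypothetical small vectors; in practice they would come from an image encoder such as CLIP's, and the "too low" threshold has to be calibrated per use case.

```python
import math
from itertools import combinations


def cosine_similarity(u: list[float], v: list[float]) -> float:
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0:
        raise ValueError("zero-length embedding")
    return sum(a * b for a, b in zip(u, v)) / norm


def mean_pairwise_distance(embeddings: list[list[float]]) -> float:
    """Average cosine distance (1 - cosine similarity) over all pairs of images."""
    pairs = list(combinations(embeddings, 2))
    if not pairs:
        raise ValueError("need at least two embeddings")
    return sum(1 - cosine_similarity(u, v) for u, v in pairs) / len(pairs)


# Hypothetical 3-d embeddings for four images generated from the same prompt.
batch = [[0.9, 0.1, 0.0], [0.88, 0.12, 0.01], [0.1, 0.9, 0.2], [0.2, 0.1, 0.95]]
print(round(mean_pairwise_distance(batch), 3))
```

A value near 0 means the batch is close to a set of duplicates, a practical warning sign of mode collapse.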

Benchmarks often measure diversity by testing how many distinct objects, compositions, or styles appear when generating images from a fixed prompt set. In creative domains, diversity directly translates into usefulness, giving enterprises more flexibility and novelty in their visual outputs.

Prompt Fidelity (Image-Text Relevance)

For text-to-image models, one of the most important checks is whether the generated image truly reflects the input prompt. Enterprises often rely on this alignment for use cases like product visualization, where the output must match specifications exactly. If the prompt says “a red couch in a modern living room,” then the image should unmistakably convey that scene—not just something vaguely similar.

A popular way to measure this is CLIPScore, which uses a pretrained CLIP model to calculate semantic similarity between the prompt and the generated image. Higher scores mean stronger alignment, and research has shown that CLIPScore correlates well with human judgment of image-text consistency. In scenarios where ground-truth image/caption pairs exist, traditional captioning metrics like BLEU or CIDEr can also be applied, though these are less common for open-ended generative tasks.
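Given CLIP embeddings for an image and its prompt, CLIPScore reduces to a clipped, rescaled cosine similarity; the original CLIPScore paper uses w = 2.5. The embeddings below are hypothetical stand-ins for the output of a real CLIP model, and implementations differ in their scaling constant, so scores should only be compared within one tool.

```python
import math


def clip_score(image_emb: list[float], text_emb: list[float], w: float = 2.5) -> float:
    """CLIPScore = w * max(cos(image, text), 0): negative similarity is clipped to zero."""
    dot = sum(a * b for a, b in zip(image_emb, text_emb))
    norm = math.sqrt(sum(a * a for a in image_emb)) * math.sqrt(sum(b * b for b in text_emb))
    if norm == 0:
        raise ValueError("zero-length embedding")
    return w * max(dot / norm, 0.0)


# Hypothetical embeddings for "a red couch in a modern living room" and a generated image.
text_emb = [0.7, 0.2, 0.1]
image_emb = [0.6, 0.3, 0.2]
print(round(clip_score(image_emb, text_emb), 3))
```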

Because automated metrics still have limitations, human evaluation remains essential, especially in high-stakes or enterprise use cases. Testers often rate outputs based on how well they satisfy the requirements of the prompt, and in production workflows, manual review of critical assets is still standard practice.

Visual Consistency and Coherence

In many enterprise scenarios, it’s not enough for a model to generate a single realistic image—it must also maintain consistency across multiple images or within complex scenes. For example, if a company needs several product shots from different angles, the AI should preserve the same color, design details, and branding elements in each version. Similarly, when generating characters, features like hairstyle, clothing, or accessories should remain stable across variations.

Within a single image, coherence refers to whether all elements logically fit together. A globally plausible image should avoid odd combinations, like mismatched hands or objects that defy physics. Models sometimes produce outputs that look polished at first glance but contain subtle inconsistencies that break trust.

Evaluating this often requires perceptual checks or feature tracking, sometimes aided by automated tools. For instance, object detectors or attribute verification systems can confirm whether specified details (e.g., “a person holding a yellow ball”) actually appear as intended. Some workflows also apply segmentation or captioning models to generated outputs as a way of automatically validating visual attributes.
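Attribute verification across a set of product shots can be sketched as a pass rate: an image passes only if every required attribute was detected in it. The detector output here is a hypothetical set of labels per image; in practice it would come from an object detector, attribute classifier, or VQA model, each with its own error rate.

```python
def attribute_pass_rate(required: set[str], detected_per_image: list[set[str]]) -> tuple[float, list[int]]:
    """Share of images in which every required attribute was detected, plus failing indices."""
    if not detected_per_image:
        raise ValueError("need at least one image")
    failures = [i for i, detected in enumerate(detected_per_image) if not required <= detected]
    passed = len(detected_per_image) - len(failures)
    return passed / len(detected_per_image), failures


required = {"blue finish", "logo", "round handle"}
# Hypothetical labels from an object detector or VQA model, one set per product shot.
detected = [
    {"blue finish", "logo", "round handle", "table"},
    {"blue finish", "logo"},
    {"blue finish", "logo", "round handle"},
]
rate, failures = attribute_pass_rate(required, detected)
print(round(rate, 3), failures)  # 0.667 [1]
```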

For production use, enterprises typically combine automated checks with human review, since subtle inconsistencies are often best caught by human eyes. Visual consistency is critical not just for aesthetics, but also for maintaining brand integrity and user trust.

Safety and Appropriateness

Just as with text generation, safety is non-negotiable in image generation. Enterprises must ensure that outputs are free from disallowed or harmful content, such as violence, nudity, or other sensitive imagery that violates brand or regulatory guidelines. A single unsafe output can erode user trust and create serious reputational or legal risks.

To evaluate this, organizations typically run content moderation classifiers on batches of generated images to automatically flag NSFW or violent content. A key metric here is the rate of unsafe outputs—the goal being as close to zero as possible. Stress-testing is also common: enterprises deliberately use edge-case prompts that could trigger unsafe results and then measure how often the model passes all safety filters.

Beyond content filtering, some enterprises require watermarking or metadata attribution to track image provenance. While not a direct quality metric, verifying that watermarks are present—and that they don’t degrade visual quality—is an important part of production readiness.

Safety checks, combined with strong governance, ensure that generative image systems deliver outputs that are not only creative and realistic, but also responsible and trustworthy.

Bias & Representation

Just as language models can reflect social biases, image generators are also prone to producing stereotypical or skewed outputs. For enterprises, this raises critical questions of fairness and inclusivity: do the images represent diversity appropriately, or do they reinforce narrow stereotypes? For example, when prompted with a gender-neutral profession like “CEO” or “nurse,” does the model consistently generate outputs that lean toward certain genders or ethnicities?

To evaluate this, organizations often create balanced prompt sets and then analyze the demographic distribution of the resulting images. Tools like face recognition or attribute classifiers can help quantify representation. Research in this space has proposed specialized measures, such as the Generative Fairness metric, which checks whether outputs for prompts like “a portrait of a doctor” reflect proportional diversity in gender and race.
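Once each image for a prompt has been labeled, representation can be summarized as group shares and their deviation from a reference split. The labels below are hypothetical, the equal-split reference is an assumption (the appropriate target distribution is a policy decision), and automated perceived-attribute classifiers are themselves error-prone, so results should be spot-checked by people.

```python
from collections import Counter


def representation_shares(labels: list[str], groups: list[str]) -> tuple[dict[str, float], float]:
    """Share of images per group and the largest deviation from an equal split."""
    if not labels or not groups:
        raise ValueError("need labels and groups")
    counts = Counter(labels)
    shares = {group: counts[group] / len(labels) for group in groups}
    target = 1 / len(groups)
    return shares, max(abs(share - target) for share in shares.values())


# Hypothetical perceived-gender labels for 10 images generated from "a portrait of a CEO".
labels = ["man"] * 8 + ["woman"] * 2
shares, max_dev = representation_shares(labels, ["man", "woman"])
print(shares, round(max_dev, 2))  # {'man': 0.8, 'woman': 0.2} 0.3
```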

Bias isn’t only about overrepresentation—it’s also about omission. For instance, if every generated image of a “wedding couple” depicts only man–woman pairs, the system is failing on inclusivity. Enterprises frequently run qualitative bias audits using curated prompt lists to catch these issues. Addressing bias is not only a matter of ethical AI—it’s also key to protecting brand reputation and building user trust.

Common Image Generation Benchmarks

Just as text models are evaluated on standard datasets, image generation models are also benchmarked against widely used research sets. These provide consistent ways to measure quality, diversity, and prompt alignment across different approaches.

For general-purpose evaluation, datasets like MS-COCO are popular. Originally built for image captioning, COCO is also used for text-to-image tasks, checking how well models generate visuals that match its captions. Other benchmarks include FFHQ (for faces), as well as ImageNet and CIFAR, which are often used for class-conditional generation. On these datasets, metrics like FID are typically reported to compare improvements across models.

Beyond automated metrics, human evaluation plays a major role. For example, Google’s DrawBench provides curated prompt sets where humans compare outputs from different models, offering a subjective but highly valuable measure of quality.

Benchmarks also extend beyond image creation to related tasks. For image captioning (image → text), metrics like BLEU, METEOR, CIDEr, and SPICE remain the standard—particularly relevant for enterprises building accessibility tools or cataloging systems. For text-to-image alignment, newer efforts like the TIFA benchmark measure how faithfully generated images reflect textual prompts, often by running question-answering tests on the images themselves.

Together, these benchmarks provide a well-rounded picture of a model’s performance—combining objective metrics, subjective human preferences, and alignment checks to guide enterprise adoption.

| Image (Generative Models) | Common Benchmarks / Metrics | Relevance |
|---|---|---|
| Quality & Realism | FID, Inception Score, Precision/Recall | Lower FID = closer to real distribution |
| Prompt Fidelity | CLIPScore, TIFA, human eval | Ensures images match description |
| Diversity | IS diversity component, CLIP embedding variance | Avoids mode collapse (duplicates) |
| Consistency | Attribute agreement, feature tracking | Needed for product design / branding |
| Safety | NSFW classifiers, watermark detection | Ensures compliance with brand safety |
| Bias / Representation | Demographic parity tests on professions, etc. | Critical in HR/marketing content |
| Benchmarks | MS-COCO, DrawBench, COCO Captions | Common reference datasets for comparison |

Evaluations for Use Cases with Audio Generation

When generative AI extends into audio—whether speech, music, or sound effects—the evaluation framework once again shifts. Instead of focusing on textual fluency or visual realism, the spotlight turns to audio quality, intelligibility, naturalness, and alignment with intent. Metrics must capture not only how clear and lifelike the sound is, but also whether it faithfully represents the input (such as a script, prompt, or style).

As with text and image generation, audio outputs can seem impressive at first listen but fall short under closer scrutiny—for example, producing robotic speech, background artifacts, or misaligned timing. That’s why systematic evaluation combining quantitative metrics and human judgment is essential before deploying audio models in production.

Naturalness (MOS Score)

For text-to-speech (TTS) systems, the gold standard of evaluation is naturalness: how human-like the audio sounds. The most widely used measure here is the Mean Opinion Score (MOS), where human listeners rate audio quality on a scale (typically 1 to 5). A MOS above roughly 4.0 generally indicates natural, high-quality speech (recordings of real speakers typically score in the mid-4 range in such tests), while a score closer to 2.5 signals clearly synthetic or unpleasant output.
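Because MOS is an average over a limited number of listeners, it is usually reported with a confidence interval. The sketch below uses a normal approximation (z = 1.96 for 95%); the ratings are hypothetical, and with small listener pools a t-distribution interval would be more appropriate.

```python
import math
import statistics


def mos_with_ci(ratings: list[int], z: float = 1.96) -> tuple[float, float]:
    """Mean opinion score and the half-width of a normal-approximation confidence interval."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    mean = float(statistics.mean(ratings))
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mean, half_width


# Hypothetical 1-5 ratings from listeners for one TTS voice.
ratings = [4, 5, 4, 3, 4, 5, 4, 4]
mos, ci = mos_with_ci(ratings)
print(f"MOS = {mos:.2f} ± {ci:.2f}")
```

Two voices whose intervals overlap heavily should not be treated as meaningfully different, however different their point estimates look.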

Enterprises typically run MOS evaluations with target audiences in realistic settings, such as testing synthetic voices for IVR phone systems or customer support agents. Large-scale evaluations like the annual Blizzard Challenge go further, comparing TTS systems built from a common dataset through large listening tests that include MOS ratings.

Since human evaluations are resource-intensive, some teams also leverage predicted MOS models—neural networks trained to approximate human ratings. While not a perfect substitute, they provide a faster way to monitor naturalness during development cycles.

Intelligibility & Accuracy

Even the most natural-sounding synthetic voice is useless if listeners can’t clearly understand the words. Intelligibility and accuracy ensure that generated speech faithfully conveys the intended text—critical for use cases like customer support, where a single mispronounced product name can create confusion or frustration.

The most common metric here is Word Error Rate (WER), calculated by transcribing the TTS output with an ASR system and comparing it to the original script. A low WER—ideally close to 0%—means the audio is highly intelligible. For sensitive applications like screen readers or assistive tools, enterprises often set strict thresholds, such as requiring WER < 5%.
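WER is the word-level edit distance between the original script (reference) and the ASR transcript of the synthesized audio (hypothesis), divided by the number of reference words: (substitutions + deletions + insertions) / N. The sketch assumes the ASR step has already run and uses only lowercasing as normalization; real pipelines also normalize numbers and punctuation, and libraries such as `jiwer` provide tested implementations.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the first i-1 reference words and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("your balance is ready", "your balance is red"))  # 0.25
```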

Another useful measure is Short-Time Objective Intelligibility (STOI), which predicts intelligibility by comparing a processed or degraded signal with a clean reference recording. STOI is reported on a 0 to 1 scale, and scores above about 0.9 typically indicate excellent intelligibility. Together, WER and STOI complement MOS ratings: a voice might sound natural overall, but these metrics catch subtle issues like slurred words or unclear phrasing.

Audio Quality and Distortions

Naturalness is only part of the story—production-ready audio must also be clean, distortion-free, and artifact-free. Background noise, clipping, or unnatural signal patterns can quickly undermine user trust, especially in applications like customer service or accessibility tools.

To evaluate this, enterprises often use objective signal-level metrics. The Perceptual Evaluation of Speech Quality (PESQ, ITU-T P.862) is a well-known standard, originally developed for telephony, that predicts perceived voice quality by comparing a degraded signal against a clean reference. Scores range from about 1.0 to 4.5, with higher values indicating clearer, higher-quality audio.

Another important measure is Mel Cepstral Distortion (MCD), which quantifies how closely the timbre of generated speech matches a human reference recording. Expressed in decibels, a lower MCD means the synthetic voice more closely resembles the target speaker.
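A common form of MCD per frame is (10 / ln 10) · sqrt(2 · Σ_d (c_d − ĉ_d)²), summed over the mel-cepstral coefficients excluding the 0th (energy) coefficient, then averaged over frames. The sketch assumes the mel-cepstra have already been extracted and time-aligned (typically with dynamic time warping); the frame values are hypothetical.

```python
import math


def mel_cepstral_distortion(ref_frames: list[list[float]], gen_frames: list[list[float]]) -> float:
    """Mean MCD in dB over aligned frame pairs, skipping coefficient 0 (energy)."""
    if not ref_frames or len(ref_frames) != len(gen_frames):
        raise ValueError("need the same non-zero number of aligned frames")
    scale = 10.0 / math.log(10.0) * math.sqrt(2.0)
    total = 0.0
    for ref, gen in zip(ref_frames, gen_frames):
        squared = sum((a - b) ** 2 for a, b in zip(ref[1:], gen[1:]))
        total += scale * math.sqrt(squared)
    return total / len(ref_frames)


# Hypothetical 3-coefficient mel-cepstra for two aligned frames.
reference = [[5.0, 1.0, 0.2], [4.8, 0.9, 0.1]]
generated = [[4.0, 0.8, 0.3], [4.9, 1.1, 0.0]]
print(round(mel_cepstral_distortion(reference, generated), 2))
```

Tracking this number across builds on a fixed set of test sentences is what makes the regression check described here practical.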

These objective metrics are particularly valuable for fast regression testing during model development. For example, if MCD starts creeping upward in new builds, it’s a clear sign that the system is drifting away from the desired voice quality. Combined with human listening tests, they help ensure that synthetic speech is not only natural but also technically robust.

Speaker Similarity (for voice cloning)

When enterprises use generative AI for voice cloning—whether to recreate a brand’s signature voice, an audiobook narrator, or even a CEO for internal communications—the priority is ensuring the generated voice faithfully matches the target speaker. Consistency here isn’t just about quality; it’s about preserving identity and trust.

A common approach is to measure cosine similarity between speaker embeddings. Pretrained speaker verification models, such as ECAPA-TDNN, generate embeddings for both the synthetic and the real audio, and a higher similarity score indicates a closer match. Teams often treat scores of roughly 0.8 or above as a strong match, though the right threshold depends on the embedding model and should be calibrated on genuine same-speaker pairs.

Enterprises often supplement this with listening tests. In an A/B setup, human evaluators judge whether two samples come from the same speaker, which makes it possible to estimate error rates such as false accepts. This combination of automated verification and human perception testing ensures the cloned voice is accurate, consistent, and production-ready.
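The same kind of error rates can also be estimated automatically from embedding similarity scores at a chosen threshold. In the sketch, "genuine" trials pair the cloned voice with the real target speaker and "impostor" trials pair it with other speakers; the scores and the 0.75 threshold are hypothetical.

```python
def verification_error_rates(genuine: list[float], impostor: list[float], threshold: float) -> tuple[float, float]:
    """False accept rate (impostor trials scored >= threshold) and
    false reject rate (genuine trials scored < threshold)."""
    if not genuine or not impostor:
        raise ValueError("need both genuine and impostor trials")
    far = sum(score >= threshold for score in impostor) / len(impostor)
    frr = sum(score < threshold for score in genuine) / len(genuine)
    return far, frr


# Hypothetical cosine scores: genuine = cloned voice vs. real target speaker,
# impostor = cloned voice vs. other speakers.
genuine = [0.85, 0.78, 0.91, 0.66]
impostor = [0.12, 0.35, 0.81, 0.20]
print(verification_error_rates(genuine, impostor, threshold=0.75))  # (0.25, 0.25)
```

Sweeping the threshold trades false accepts against false rejects; the point where the two rates are equal (the equal error rate) is a common single-number summary.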

Prosody and Emotional Tone

Beyond clarity and accuracy, truly lifelike speech depends on prosody—the rhythm, stress, and intonation patterns that make voices sound natural—and the emotional tone they convey. These subtleties play a huge role in user experience. For example, does the voice rise naturally at the end of a question? Are pauses inserted at the right places? Does the tone feel warm and friendly when needed, or formal and professional in a business context?

Quantifying these qualities is challenging. Some teams track metrics like pause insertion error rate or intonation RMSE, which compares pitch contours against a reference recording. Still, many aspects of prosody and emotional expression are best judged by human evaluators. Enterprises often create evaluation rubrics where raters score outputs on criteria like expressiveness, tone appropriateness, and overall natural flow.
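Intonation RMSE is often computed on fundamental frequency (F0) contours, using only frames where both the reference and the generated speech are voiced. The sketch assumes the contours are already time-aligned and that unvoiced frames are marked with 0, a common pitch-tracker convention; the values are hypothetical, and some teams report the error in cents rather than Hz.

```python
import math


def f0_rmse(ref_f0: list[float], gen_f0: list[float]) -> float:
    """RMSE in Hz over frames that are voiced (F0 > 0) in both aligned contours."""
    pairs = [(r, g) for r, g in zip(ref_f0, gen_f0) if r > 0 and g > 0]
    if not pairs:
        raise ValueError("no frames are voiced in both contours")
    return math.sqrt(sum((r - g) ** 2 for r, g in pairs) / len(pairs))


# The middle frame is unvoiced in the reference, so it is skipped.
print(f0_rmse([100.0, 0.0, 200.0], [110.0, 150.0, 190.0]))  # 10.0
```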

In high-stakes applications—such as a mental health chatbot voice—getting prosody and emotional tone right isn’t just nice to have; it’s part of the acceptance criteria. The voice must feel empathetic, safe, and trustworthy, reinforcing the user’s confidence in the system.

Latency (Real-time performance)

When audio is generated in real time—such as a voice assistant replying to a user—latency becomes just as important as quality. Even the most natural-sounding voice will frustrate users if it takes too long to respond. Key measures here include time to first audio sample and total generation time.

Enterprises often set strict thresholds, especially for streaming TTS systems, where delays of even a few hundred milliseconds can disrupt the conversational flow. Production readiness tests typically ensure the model can generate speech within a defined window (e.g., under X ms per sentence of N characters).
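Both latency measures can be taken from a streaming synthesizer in one pass: time to the first audio chunk, total wall time, and the real-time factor (RTF = processing time / duration of the audio produced, where values below 1.0 mean faster than real time). The `synthesize` callable and the fake engine are hypothetical stand-ins for a real streaming TTS client.

```python
import time
from typing import Callable, Iterable


def measure_streaming_latency(
    synthesize: Callable[[str], Iterable[list[float]]], text: str, sample_rate: int
) -> dict[str, float]:
    """Time to first audio chunk, total wall time, and real-time factor."""
    start = time.perf_counter()
    first_chunk_s = None
    n_samples = 0
    for chunk in synthesize(text):
        if first_chunk_s is None:
            first_chunk_s = time.perf_counter() - start
        n_samples += len(chunk)
    total_s = time.perf_counter() - start
    if first_chunk_s is None or n_samples == 0:
        raise ValueError("synthesizer produced no audio")
    return {
        "time_to_first_audio_s": first_chunk_s,
        "total_s": total_s,
        "rtf": total_s / (n_samples / sample_rate),
    }


def fake_streaming_tts(text: str):
    """Hypothetical stand-in for a streaming TTS engine: three 100 ms chunks at 16 kHz."""
    for _ in range(3):
        time.sleep(0.01)  # simulated compute per chunk
        yield [0.0] * 1600


metrics = measure_streaming_latency(fake_streaming_tts, "Your order has shipped.", sample_rate=16000)
print(metrics)
```

For production thresholds, run this over many sentences of the target length and compare high percentiles (such as p95), not just the average.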

While latency doesn’t directly reflect audio quality, it has a huge impact on usability and user satisfaction. For many real-world applications, striking the right balance between naturalness and responsiveness is essential for a seamless experience.

Safety

Most risks tie back to text content (which can be filtered before synthesis), but there are additional considerations unique to audio. For example, enterprises must ensure that generated speech doesn’t include sudden loud or jarring noises that could harm listeners or damage equipment. Volume normalization is a key safeguard here, keeping playback levels within a safe range.
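A basic volume safeguard measures the peak level of the generated audio and rescales it to a fixed ceiling. The sketch assumes floating-point samples in [-1.0, 1.0], and the -1 dBFS default is an illustrative choice. Peak normalization prevents clipping and sudden full-scale output, but consistent perceived loudness is usually handled with loudness measurement per ITU-R BS.1770 (LUFS).

```python
import math


def peak_dbfs(samples: list[float]) -> float:
    """Peak level in dBFS for float samples in [-1.0, 1.0]; 0 dBFS is full scale."""
    peak = max(abs(s) for s in samples)
    return float("-inf") if peak == 0 else 20 * math.log10(peak)


def normalize_peak(samples: list[float], target_dbfs: float = -1.0) -> list[float]:
    """Scale samples so the loudest one sits at target_dbfs; silence is returned unchanged."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)
    gain = 10 ** (target_dbfs / 20) / peak
    return [s * gain for s in samples]


clip = [0.1, -1.0, 0.5]  # hypothetical clip already hitting full scale
safe = normalize_peak(clip, -6.0)
print(round(peak_dbfs(clip), 1), round(peak_dbfs(safe), 1))  # 0.0 -6.0
```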

Another edge case is adversarial audio. In theory, a TTS system could produce sounds that unintentionally mimic commands to voice-activated devices, creating the risk of unintended actions. While rare, enterprises deploying TTS at scale often include checks to guard against this possibility.

By combining content filtering, volume controls, and adversarial testing, organizations can ensure that audio outputs are not only clear and natural but also safe and responsible for end users.

| Audio (TTS / Voice Gen) | Common Benchmarks / Metrics | Relevance |
|---|---|---|
| Naturalness | MOS (Mean Opinion Score), predicted MOS | Gold standard for speech quality |
| Intelligibility | WER (on ASR transcription), STOI | Must be clear for customer support |
| Audio Quality | PESQ, MCD (Mel Cepstral Distortion) | Detect distortions, artifacts |
| Speaker Similarity | Embedding cosine similarity (ECAPA-TDNN) | For brand/character voice cloning |
| Prosody & Emotion | Intonation RMSE, human rubric ratings | Needed for empathetic assistants |
| Latency | Real-time factor (RTF), time to first audio sample | Must meet live response requirements |
| Benchmarks | LJSpeech, VCTK, Blizzard Challenge | Widely used for TTS evaluation |
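Of the audio-quality metrics in the table, MCD is straightforward to compute once mel-cepstral coefficients are available. The sketch below uses the commonly cited per-frame form (10 / ln 10) · sqrt(2 · Σ_d (c_d − c′_d)²), averaged over frames, and excludes the energy coefficient c0 by default. It assumes the coefficient frames have already been extracted and time-aligned (often with DTW); feature extraction itself is out of scope here.

```python
import math
from typing import Sequence


def mel_cepstral_distortion(
    ref_frames: Sequence[Sequence[float]],
    gen_frames: Sequence[Sequence[float]],
    skip_c0: bool = True,
) -> float:
    """Mean MCD in dB over frame-aligned mel-cepstral coefficient vectors."""
    if not ref_frames or len(ref_frames) != len(gen_frames):
        raise ValueError("need the same, non-zero number of aligned frames")
    scale = 10.0 / math.log(10.0) * math.sqrt(2.0)
    start = 1 if skip_c0 else 0  # c0 mostly tracks energy, so it is usually excluded
    total = 0.0
    for ref, gen in zip(ref_frames, gen_frames):
        sq = sum((a - b) ** 2 for a, b in zip(ref[start:], gen[start:]))
        total += scale * math.sqrt(sq)
    return total / len(ref_frames)
```

Lower MCD means the synthesized spectrum is closer to the reference recording; because it needs a reference, it suits resynthesis or voice-cloning tests more than open-ended generation.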

Evaluations for Use Cases with Multimodal Generation

When generative AI spans multiple modalities—such as combining text, images, and audio—the evaluation challenge grows more complex. It’s no longer enough to check quality within each individual channel; systems must also be judged on how well different modalities align and reinforce one another. For example, does a generated image accurately match its textual caption? Does a narrated audio clip stay faithful to both the script and the visual scene it describes?

Evaluation criteria here blend quantitative metrics (like embedding-based similarity across modalities) with human judgment, since subtle inconsistencies often slip past automated tools. Just as with single-modality generation, multimodal outputs can appear impressive at first glance but fail under closer inspection—making systematic evaluation essential. For production readiness, enterprises need to validate not only the realism of each output, but also the coherence, consistency, and accuracy across all modalities working together.

Cross-Modal Alignment & Understanding

For multimodal AI systems, the true test lies in how well they integrate and align information across different inputs—text, images, and audio. A strong model doesn’t just process each stream separately; it understands them in combination. For instance, in a visual question-answering task (image + question → answer), the system must accurately interpret both the picture and the query to generate the right response.

One way to evaluate this is through cross-modal retrieval tasks: given a caption, can the model retrieve the correct image (or vice versa)? Metrics like Recall@K and mean average precision (mAP) capture how reliably the system matches inputs across modalities, with high Recall@1 indicating tight alignment.
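Recall@K can be computed directly from a query-by-candidate similarity matrix. This sketch assumes the usual paired test-set layout, where the correct image for caption i is image i; how the similarity scores are produced (e.g. CLIP embeddings) is left outside the function.

```python
from typing import Sequence


def recall_at_k(similarity: Sequence[Sequence[float]], k: int) -> float:
    """Fraction of queries whose correct candidate appears in the top-k.

    similarity[i][j] is the score between query i (e.g. caption i) and
    candidate j (e.g. image j); the ground-truth match for query i is j == i.
    """
    if not similarity:
        raise ValueError("empty similarity matrix")
    hits = 0
    for i, row in enumerate(similarity):
        # Rank candidate indices from most to least similar.
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(similarity)
```

Evaluating the transposed matrix gives the reverse direction (image to caption), and reporting both is common because retrieval quality is often asymmetric.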

Another lens is the modality gap, which measures how far apart the model’s embeddings for different modalities sit in its shared embedding space. A smaller gap is generally taken as a sign that the model integrates representations more tightly. In practice, many enterprises use CLIP-based evaluations as a proxy, comparing embeddings of generated images and their captions. High similarity scores suggest that the model has preserved consistency between what it shows and what it says.
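Both ideas reduce to simple vector arithmetic once paired embeddings are available. The sketch below assumes image and text embeddings from the same CLIP-style encoder, with row i of each array describing the same pair. It computes per-pair cosine similarity and, following one common definition, the modality gap as the distance between the centroids of the L2-normalized image and text embeddings.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def paired_cosine(image_emb: np.ndarray, text_emb: np.ndarray) -> np.ndarray:
    """Cosine similarity between row i of image_emb and row i of text_emb."""
    img, txt = l2_normalize(image_emb), l2_normalize(text_emb)
    return np.sum(img * txt, axis=1)


def modality_gap(image_emb: np.ndarray, text_emb: np.ndarray) -> float:
    """Euclidean distance between the centroids of the normalized embeddings."""
    img, txt = l2_normalize(image_emb), l2_normalize(text_emb)
    return float(np.linalg.norm(img.mean(axis=0) - txt.mean(axis=0)))
```

In an evaluation run, the mean (or a low percentile) of `paired_cosine` over generated image/caption pairs is a cheap consistency signal, while the gap is more useful for comparing encoders than for scoring individual outputs.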

Cross-modal alignment is at the heart of multimodal AI, ensuring that outputs across text, image, and audio don’t just look or sound good individually, but also make sense together.

Task-Specific Multimodal Performance

Most multimodal systems are designed with a specific use case in mind, so their evaluations naturally follow the metrics of that task—while accounting for the additional complexity of multiple input or output types.

For example, in image captioning (image → text), generated captions are compared against ground-truth captions using n-gram overlap metrics like BLEU alongside captioning-specific metrics such as CIDEr and SPICE. In visual question answering (VQA), accuracy on benchmark datasets such as VQAv2 measures whether the model provides the correct response given an image and a query. For multimodal classification tasks (e.g., emotion recognition from video using both audio and visuals), accuracy or F1 score applies just as in traditional classification.
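VQA accuracy differs from plain exact-match accuracy because each VQAv2 question has several human answers. The sketch below uses the widely quoted simplified form, min(#annotators who gave the answer / 3, 1); the official evaluation script additionally averages over annotator subsets and applies more answer normalization (punctuation, articles, number words), so treat scores from this version as approximate.

```python
from typing import Sequence


def vqa_accuracy(prediction: str, human_answers: Sequence[str]) -> float:
    """Simplified VQA accuracy: full credit if at least 3 annotators
    gave the predicted answer, partial credit otherwise."""
    normalized = prediction.strip().lower()  # minimal normalization only
    matches = sum(1 for a in human_answers if a.strip().lower() == normalized)
    return min(matches / 3.0, 1.0)
```

Averaging this score over a dataset gives the headline number; partial credit rewards answers that some, but not most, annotators considered correct.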

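Generative tasks like text-to-image are more complex. Here, realism is typically assessed with FID (Fréchet Inception Distance), which compares the distribution of generated images against real ones in a pretrained feature space. Because FID never looks at the prompt, it is usually paired with a text-image alignment score (such as a CLIP-based similarity) to check how well each image matches the text that produced it.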
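FID fits a Gaussian to each set of image features and computes the Fréchet distance between them: ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^½). The sketch below implements that formula with NumPy only, computing the matrix square-root term through a symmetric eigendecomposition. It assumes features have already been extracted; the standard setup uses Inception-v3 pooled features, and scores are only comparable when the same extractor and sample sizes are used.

```python
import numpy as np


def _sqrtm_psd(m: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semi-definite matrix."""
    vals, vecs = np.linalg.eigh(m)
    vals = np.clip(vals, 0.0, None)  # remove tiny negative values from round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(mu1, sigma1, mu2, sigma2) -> float:
    diff = mu1 - mu2
    s1_half = _sqrtm_psd(sigma1)
    # Tr((S1 S2)^1/2) equals Tr((S1^1/2 S2 S1^1/2)^1/2), and the latter is symmetric PSD.
    middle = s1_half @ sigma2 @ s1_half
    eigvals = np.clip(np.linalg.eigvalsh((middle + middle.T) / 2.0), 0.0, None)
    trace_covmean = np.sqrt(eigvals).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * trace_covmean)


def fid_from_features(real_feats: np.ndarray, gen_feats: np.ndarray) -> float:
    """FID between two (n_samples, n_features) feature arrays."""
    mu_r, mu_g = real_feats.mean(axis=0), gen_feats.mean(axis=0)
    sigma_r = np.cov(real_feats, rowvar=False)
    sigma_g = np.cov(gen_feats, rowvar=False)
    return frechet_distance(mu_r, sigma_r, mu_g, sigma_g)
```

Lower is better, and identical feature sets give (numerically) zero.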

Enterprises often evaluate both modality-specific quality and end-to-end performance. Take a meeting assistant that listens to audio and generates a text summary: the audio transcription might be judged by Word Error Rate (WER), while the summary itself is scored using ROUGE or factual consistency checks. End-to-end evaluation then ensures the final summary faithfully reflects the spoken content—not just that each step is good in isolation.
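WER, used here for the transcription step (and in the TTS table for intelligibility, by transcribing synthesized speech and comparing it to the input text), is the word-level edit distance divided by the number of reference words. This sketch assumes any text normalization (casing, punctuation, numerals) has already been applied to both strings.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = minimum edits to turn the first i-1 reference words into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            substitution = prev[j - 1] + (ref[i - 1] != hyp[j - 1])
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[len(hyp)] / len(ref)
```

For example, "the cat sat on the mat" transcribed as "the cat sit on mat" has one substitution and one deletion, so WER is 2/6.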

This layered approach helps verify that multimodal systems don’t just excel at one part of the pipeline but deliver value across the full user experience.

Multimodal Consistency (Coherence Across Modalities)

In multimodal systems, it’s not enough for each output to be strong on its own; outputs must also be consistent across modalities. If a model generates both an image and a description, the two should align seamlessly. Similarly, if an AI assistant detects an angry tone in a user’s voice, its response should reflect that emotional awareness and adapt appropriately.

Evaluating this often requires human judgment, with raters checking whether, for example, a generated caption accurately describes a generated image. Automated checks can also be used: one approach is round-trip testing, where a model generates an image from text and then a caption from that image; if the caption drifts away from the original text, it signals information loss.
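The round-trip check can be expressed as a small harness. In this sketch, `text_to_image` and `image_to_text` are hypothetical callables wrapping whatever generation and captioning models are under test, and bag-of-words F1 is a deliberately crude stand-in for the similarity function; embedding similarity or an LLM judge would usually be a better choice.

```python
from collections import Counter
from typing import Any, Callable, Tuple


def token_f1(reference: str, candidate: str) -> float:
    """Bag-of-words F1 between two strings (a crude similarity proxy)."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def round_trip_score(
    prompt: str,
    text_to_image: Callable[[str], Any],   # hypothetical: model under test
    image_to_text: Callable[[Any], str],   # hypothetical: captioning model
    similarity: Callable[[str, str], float] = token_f1,
) -> Tuple[str, float]:
    """Generate an image from the prompt, caption it, and score the drift."""
    image = text_to_image(prompt)
    caption = image_to_text(image)
    # A low score suggests information was lost or changed along the way.
    return caption, similarity(prompt, caption)
```

A low score does not say which model lost the information, so flagged cases typically go to human review.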

For vision-and-text tasks, tools like Grad-CAM or attention visualization can reveal whether the model is actually focusing on the right regions of an image when answering a question. In video+text models, coherence means ensuring narration or subtitles stay in sync with what’s happening on screen—moment by moment.

Ultimately, multimodal consistency is about cross-channel trustworthiness: making sure the story told across text, visuals, and audio holds together without contradictions or gaps.

| Multimodal (Vision+Text+Audio) | Common Benchmarks / Metrics | Relevance |
|---|---|---|
| Cross-Modal Alignment | CLIP retrieval Recall@K, modality gap | Ensures consistent understanding |
| Task Performance | VQAv2 (image QA), COCO Captions, GQA, NLVR2 | Each task has its own metric (accuracy, BLEU, CIDEr) |
| Consistency Across Modalities | Round-trip checks (caption → image → caption) | Ensures no information loss |
| Semantic/Factual Accuracy | OCR/object detectors to verify grounded answers | Needed for document/scene Q&A |
| Safety & Bias | Hateful Memes, multimodal fairness probes | Avoid unsafe or stereotyped multimodal outputs |
| Benchmarks | VQAv2, COCO Captions, VisDial, Hateful Memes | Standard multimodal testbeds |

Common Evaluation Frameworks & Tools

Enterprises rarely build evaluation pipelines from scratch. Instead, they rely on a mix of open-source and commercial frameworks to design tests, monitor performance, and ensure models are production-ready. Some of the most widely used include:

- OpenAI Evals – An open-source framework for creating custom evaluation suites for LLMs. It allows teams to define test cases (prompts and expected outputs) and automate regression testing. For example, a company might require a model to pass 1,000 predefined prompts before deployment, ensuring consistent quality across updates.

- EleutherAI LM Evaluation Harness – A widely adopted benchmark harness that covers many standard NLP tasks—QA, summarization, commonsense reasoning, and more. It provides a unified interface and metrics like accuracy or BLEU, while also being extensible with enterprise-specific tasks.

- Hugging Face Evaluation Libraries – Libraries like evaluate and datasets make it easy to compute scores such as ROUGE, BLEU, or CIDEr, and to leverage standard datasets. Hugging Face also hosts public leaderboards (e.g., the Open LLM Leaderboard) that enterprises use to benchmark baseline performance before fine-tuning.

- Weights & Biases (W&B Weave) – Through Weave, W&B supports evaluation and monitoring for LLMs, including “LLM-as-a-judge” features, prompt/response logging, and dashboards for custom metrics. This enables teams to visualize evaluation results and detect regressions in near real time as models evolve.

- TruLens – An open-source tool for defining feedback-driven evaluation functions, such as truthfulness or toxicity checks. TruLens can be integrated directly into applications, continuously scoring outputs (e.g., assigning a “truthfulness” score by comparing responses against a knowledge base).

- LLM-as-a-Judge – A growing evaluation pattern rather than a single tool. It uses a stronger model (like GPT-4) to grade the outputs of another model. For example, you might ask GPT-4 to review an answer and score it from 1–10 on correctness and coherence. This enables large-scale evaluation—hundreds of thousands of prompts—where human judgment isn’t feasible.

- LangSmith (LangChain) – Closely integrated with applications built on LangChain, LangSmith traces complex multi-step agent interactions and includes evaluation hooks for hallucination detection, context relevance, and correctness. It also supports human feedback integration, making it a popular choice for enterprises building agent-based systems.
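Two patterns from the list, a fixed regression suite that must pass before deployment and LLM-as-a-judge scoring, combine naturally. The sketch below is framework-agnostic and does not use the API of any tool named above: `model` and `judge` are hypothetical callables that wrap whatever LLM clients you use, and the prompt template, the 1 to 10 scale, and the pass thresholds are illustrative assumptions.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

# Illustrative grading prompt; real rubrics are usually more detailed.
JUDGE_TEMPLATE = (
    "You are grading an AI assistant's answer.\n"
    "Question: {question}\n"
    "Reference answer: {reference}\n"
    "Candidate answer: {answer}\n"
    "Rate the candidate from 1 to 10 for correctness and coherence. "
    "Reply with a line of the form 'SCORE: <n>'."
)


@dataclass
class TestCase:
    prompt: str
    reference: str


def parse_score(judge_output: str) -> Optional[int]:
    """Extract 'SCORE: n' from the judge's reply; None if missing or out of range."""
    match = re.search(r"SCORE:\s*(\d{1,2})\b", judge_output)
    if not match:
        return None
    score = int(match.group(1))
    return score if 1 <= score <= 10 else None


def run_eval_gate(
    cases: Sequence[TestCase],
    model: Callable[[str], str],   # hypothetical: model under test
    judge: Callable[[str], str],   # hypothetical: stronger grading model
    pass_score: int = 7,
    required_pass_rate: float = 0.95,
) -> Tuple[float, bool]:
    """Return (pass_rate, gate_passed); unparseable verdicts count as failures."""
    if not cases:
        raise ValueError("no test cases")
    passed = 0
    for case in cases:
        answer = model(case.prompt)
        verdict = judge(JUDGE_TEMPLATE.format(
            question=case.prompt, reference=case.reference, answer=answer))
        score = parse_score(verdict)
        if score is not None and score >= pass_score:
            passed += 1
    rate = passed / len(cases)
    return rate, rate >= required_pass_rate
```

Treating an unparseable judge reply as a failure keeps the gate conservative; in practice, teams also spot-check a sample of judge verdicts against human ratings.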

Together, these tools form the backbone of modern evaluation workflows, giving enterprises the ability to combine automated scoring, human-in-the-loop checks, and continuous monitoring—all essential for safe, reliable deployment at scale.

Evaluating generative AI goes far beyond measuring speed or latency. Whether working with text, images, audio, or multimodal systems, enterprises must assess outputs for accuracy, coherence, safety, fairness, and alignment with intent. Automated metrics provide scale and consistency, while human judgment captures nuance and context that numbers alone can’t.

By combining task-specific benchmarks, cross-modal checks, and modern evaluation frameworks, organizations can build AI systems that are not only high-performing but also trustworthy, safe, and production-ready. In the end, rigorous evaluation isn’t just a technical step—it’s what turns promising prototypes into reliable enterprise solutions.
