The rapid rise of Generative AI (GenAI) is sparking a new wave of global change, a movement that can only be described as the AI transformation. Much like the digital transformation that preceded it, this shift is forcing organizations to fundamentally rethink how they operate and innovate. As companies embark on their AI transformation with pilot projects and proofs-of-concept (PoCs), cloud platforms offer the speed and flexibility needed to experiment without the upfront investment and complexity of on-premises infrastructure. This has a direct and significant impact on the cloud services market, creating both new challenges and new opportunities.

- An analysis by Goldman Sachs projects that cloud revenues could reach USD 2 trillion by 2030, largely driven by AI rollout. AI / generative AI is forecast to account for about 10-15% of that cloud spending.

- Worldwide spending on AI (including infrastructure, applications, and IT services) is expected to more than double by 2028, reaching roughly USD 632 billion.

- McKinsey estimates that by 2030, data centers globally will require roughly USD 6.7 trillion in capital expenditures to keep up with compute demand; of that, about USD 5.2 trillion will go to AI-capable processing loads.

- A BCG study projects that a majority of organizations’ IT investments in the near future will tilt toward AI, generative AI, and related cloud services.

Unsurprisingly, a significant portion of this burgeoning market will be captured by the cloud giants: AWS, Azure, and Google Cloud. While their immense scale and market penetration are undeniable advantages, their dominance in the AI era is rooted in something far more strategic than just offering bare-metal infrastructure.

Hyperscalers have invested heavily in a higher-level “AI foundry” layer—a comprehensive suite of tools and services that simplifies the entire AI lifecycle. This specialized layer allows users to move beyond simply renting raw compute power. It provides the frameworks, pre-trained models, and managed services necessary to easily experiment with new ideas, build complex applications, and seamlessly launch and scale them from a proof-of-concept to full production. This approach dramatically lowers the barriers to entry for organizations, enabling them to focus on innovation rather than infrastructure management.

Bare Metal Is Not Enough

For many Tier-2 CSPs, the current offering remains a business of raw infrastructure: virtual machines, storage, and networking. While this model has been the foundation of cloud computing, it’s becoming a significant liability in the age of AI. The rapid evolution and inherent complexity of Generative AI mean that most organizations lack the technical expertise to effectively navigate and deploy these technologies on a foundational infrastructure layer alone. They don’t want to be burdened with building the entire AI stack from scratch. By not offering an AI Foundry or a comparable integrated platform, these CSPs are missing out on a massive opportunity and facing significant risks.

The Opportunity

- Attracting High-Value AI/ML Workloads: Today’s enterprises, particularly mid-to-large-sized ones, are looking for platforms that streamline the entire AI lifecycle, from easy experimentation to seamless deployment. If a CSP can’t offer this, those customers will simply take their high-margin workloads to hyperscalers like AWS, Azure, and GCP.

- Higher-Margin Services: An AI Foundry is a value-added layer that moves a CSP beyond the low-margin commodity business of IaaS (Infrastructure as a Service). Services like model hosting, agent orchestration, and governance command higher prices and are key to boosting profitability.

- Enhanced Customer Stickiness: When a customer builds their AI workflows on a platform’s tools, model catalogs, and services, they become deeply integrated. This creates significant vendor lock-in, making it much harder for them to switch to a competitor.

- Innovation Leadership: An AI Foundry positions a CSP as a thought leader and a central part of the AI solution stack. This not only attracts partners and developers but also allows the provider to influence the adoption of specific models and technologies, building a stronger ecosystem.

- Additional Revenue Models: By moving beyond just offering raw infrastructure, CSPs can create new, high-margin revenue streams by monetizing idle hardware. They can offer Model as a Service, Token as a Service, and even higher-value Outcome as a Service, turning their existing compute resources into a new engine for profit. This allows them to escape the commoditized bare-metal business and capture value directly from their customers’ AI innovation.

The Risks of Standing Still

Failing to evolve beyond bare-metal infrastructure poses existential risks for CSPs in a market that is rapidly shifting towards AI-first solutions.

- Loss of Market Share: Many customers are moving to CSPs that provide a full AI stack. Hyperscalers have both the means and the incentive to lock in customers with their comprehensive foundry platforms, potentially squeezing smaller CSPs out of lucrative enterprise contracts.

- Margin Pressure and Commoditization: Without upgrading their offerings, Tier-2 CSPs will remain in a low-margin zone. As the underlying compute, network, and storage become commoditized, their margins will be increasingly pressured unless they move up the value chain with AI/ML services and orchestration tools.

- Higher Customer Churn: As customers’ AI needs mature, they will seek providers with better, more integrated AI tools. Without these capabilities, customers may shift to other cloud providers, leading to a loss of key accounts.

- Brand Perception and Relevance: In today’s market, having credible AI offerings is an expectation. If a CSP is perceived as lagging in AI capabilities, it harms its reputation and makes it less attractive to developers and partners.

Bud AI Foundry for Cloud Service Providers

To navigate this new landscape and capitalize on the immense market opportunity, Cloud Service Providers must evolve their offerings by integrating a robust, enterprise-grade AI foundry layer directly into their infrastructure. This layer must enable them to compete with the AI foundry services provided by hyperscalers like Azure and AWS, making it easy for their customers to build, deploy, and scale AI applications without deep technical expertise. This is where Bud AI Foundry comes in.

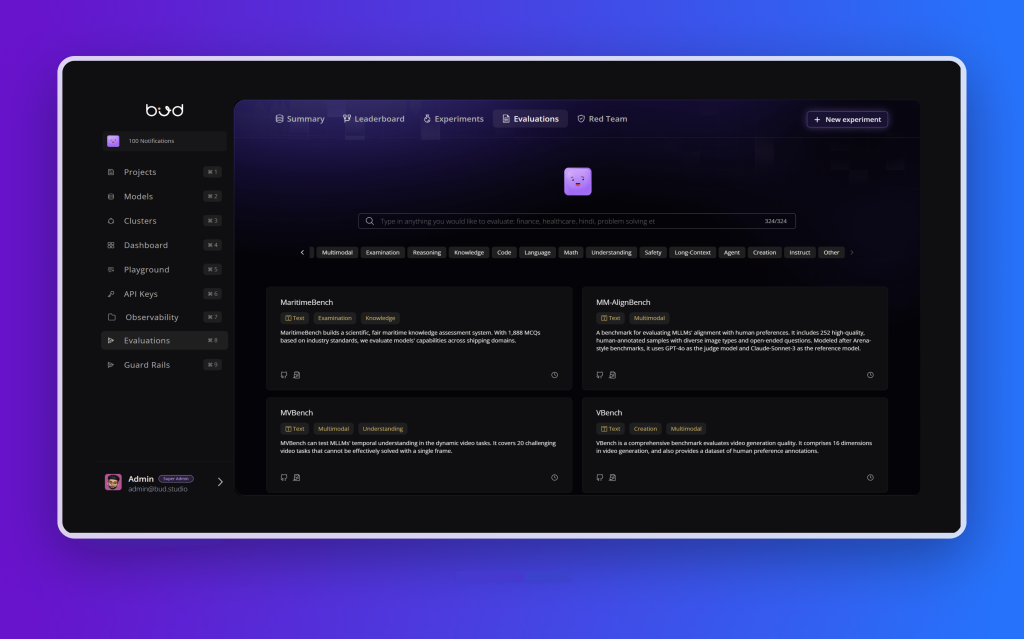

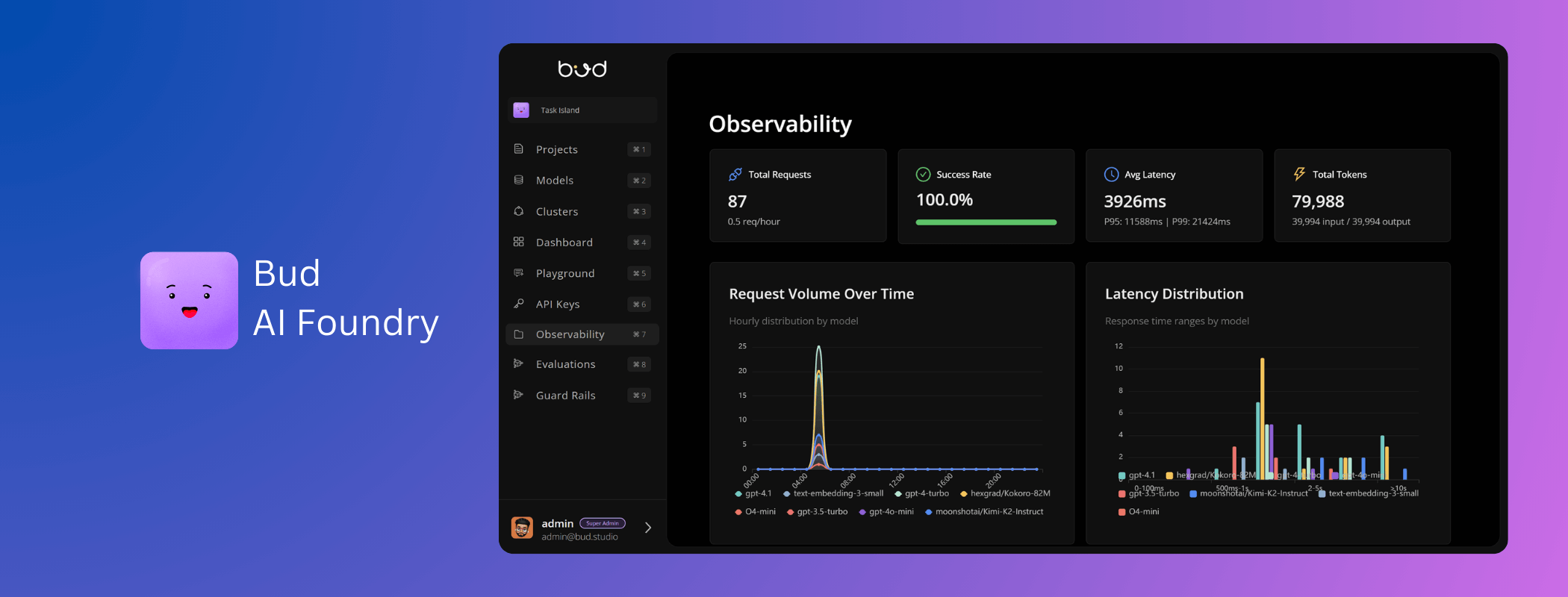

What is Bud AI Foundry?

Bud AI Foundry is a unified, enterprise-grade platform designed to empower developers, enterprises, and even non-technical users to deploy, manage, and consume Generative AI (GenAI) applications at scale. For a Cloud Service Provider, it is the comprehensive stack they can integrate with their existing infrastructure to instantly gain an “AI foundry” capability. It’s a single ecosystem that seamlessly unifies infrastructure, security, observability, and AI tools, transforming a provider’s raw hardware into a dynamic, AI-first service.

The platform is built on a core vision of making every enterprise user an “AI builder” by covering the full GenAI lifecycle, from initial experimentation to large-scale, multi-model deployments. It’s designed to be hardware-agnostic, supporting CPUs, GPUs, and other accelerators across any cloud or on-premises setup. The following are the key components available in Bud AI Foundry:

Bud Runtime: The Universal Inference Engine

The Bud Runtime is a stable and highly performant runtime that serves as the universal engine for all Generative AI models. It is designed to overcome the complexities of deploying AI by providing a single, comprehensive stack that works across different models, architectures, and hardware. With built-in support for frameworks like vLLM, SGLang, and Triton, Bud ensures that models run efficiently and reliably.

This runtime is engineered for unmatched performance, delivering a speed improvement of 0.3x to 4x across a wide range of hardware, including CPUs, HPUs, AMD MI-XX, and Nvidia’s A/H/V series. This ensures that a cloud service provider (CSP) can maximize the value of its diverse infrastructure.

One of the key strengths of the Bud Runtime is its Universal Compound AI capability. It’s not limited to a single model type; it supports a wide variety of AI models, including Large Language Models (LLMs), Reasoning Models, Speech-to-Text (STT), Text-to-Speech (TTS), image models, and more. This versatility makes it the ideal foundation for any AI service offering.

The runtime also features a powerful Self-Healing mechanism. It continuously monitors for issues, automatically intervenes to fix errors, and can even roll back from production crashes, ensuring near-perfect uptime and reliability.

For CSPs with mixed hardware, Bud’s Heterogeneous Parallelism is a game-changer. It allows you to deploy a single model across a combination of different hardware types—such as CPUs, GPUs, and HPUs—to achieve optimal efficiency and cost-effectiveness.

Finally, the Zero-Config Deployments feature automates the entire setup process. Bud’s internal “simulator” analyzes the model and hardware to automatically find the most performant and cost-effective settings, ensuring that the end-user’s Service Level Objectives (SLOs) are met without any manual configuration.
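
To make the idea concrete, here is a minimal sketch of what an SLO-driven configuration search could look like. It is not Bud’s implementation: the `Candidate` fields, the toy latency and cost formulas, and the `pick_config` function are all hypothetical, chosen only to show the pattern of enumerating settings, discarding those that miss the SLO, and keeping the cheapest survivor.

```python
from dataclasses import dataclass
from itertools import product
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    accelerators: int  # devices the model is sharded across (hypothetical knob)
    batch_size: int    # concurrent requests per replica (hypothetical knob)


def estimate_latency_ms(c: Candidate) -> float:
    # Toy model (assumption): more devices cut latency, bigger batches add delay.
    return 400.0 / c.accelerators + 15.0 * c.batch_size


def estimate_cost_per_1k_requests(c: Candidate, device_hour_usd: float = 2.0) -> float:
    # Requests per second one replica sustains under the toy latency model.
    requests_per_second = c.batch_size / (estimate_latency_ms(c) / 1000.0)
    hourly_cost = c.accelerators * device_hour_usd
    return hourly_cost / (requests_per_second * 3600.0) * 1000.0


def pick_config(slo_latency_ms: float) -> Optional[Candidate]:
    """Return the cheapest candidate whose estimated latency meets the SLO."""
    candidates = [Candidate(a, b) for a, b in product([1, 2, 4, 8], [1, 4, 8, 16])]
    feasible = [c for c in candidates if estimate_latency_ms(c) <= slo_latency_ms]
    if not feasible:
        return None  # no setting satisfies the SLO on this hardware
    return min(feasible, key=estimate_cost_per_1k_requests)


if __name__ == "__main__":
    print(pick_config(slo_latency_ms=300.0))
```

A real simulator would replace the two estimate functions with measured or modeled numbers for the actual model and hardware; the selection step stays the same.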

Effortless Deployment and Administration

Beyond its powerful core components, Bud AI Foundry simplifies the entire process of managing, deploying, and monitoring AI applications. It provides a comprehensive suite of administration tools that give Cloud Service Providers (CSPs) and their customers a single, unified view of their entire AI ecosystem. This eliminates the need for a patchwork of different platforms, streamlining operations and reducing complexity.

- Integrated Model & Cluster Management: Bud AI Foundry features a built-in Model Registry and robust Cluster Management system. This allows administrators to effortlessly register, version, and manage models and their dependencies. At the same time, they can oversee the compute clusters, ensuring resources are allocated efficiently and are ready for deployment.

- Project and User Management: The platform provides fine-grained Project Management capabilities, enabling teams to organize their work and collaborate effectively. This is paired with comprehensive User Management, allowing administrators to define roles, control access, and onboard new users with ease.

- Built-in Observability and Evaluation: To ensure models are performing as expected, Bud AI Foundry includes a full-featured Deployment Observability suite. This gives real-time insights into model performance, latency, and resource usage. Additionally, a dedicated Evaluations engine helps users benchmark model performance against specific criteria, ensuring the deployed models are meeting business objectives.

- Secure and Collaborative Playground: The Model Playground provides a safe, interactive environment for users to experiment with models and prompts before they are deployed. This sandbox is fully integrated with Guardrails and security protocols, allowing for creative exploration without compromising security.

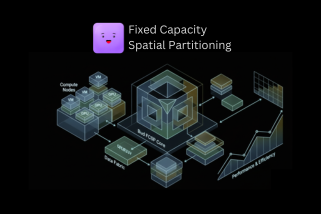

Bud Scaler: Dynamic and Intelligent Scaling for AI

The Bud Scaler is a key component of the Bud AI Foundry, designed to intelligently manage the scaling of all Bud resources—including models, tools, applications, and agents—across your cloud infrastructure. The Scaler makes decisions based on key metrics like cost, Service Level Objectives (SLOs), and performance, ensuring that your resources are always deployed in the most efficient manner possible.

At its core is Auto Routing, an advanced feature that intelligently routes AI workloads based on factors such as accuracy, SLOs, and even geographic location. This ensures better Total Cost of Ownership (TCO) and helps maintain compliance and performance. The Bud Scaler is also completely Cloud & Hardware Agnostic, giving you the flexibility to scale across various cloud providers, regions, and hardware types. This is particularly crucial for mitigating hardware scarcity and ensuring better TCO.
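
As an illustration of routing on accuracy, SLOs, and location, the sketch below filters a set of endpoints by those constraints and then picks the cheapest one that remains. The `Endpoint` fields, the `FLEET` sample data, and the `route` function are assumptions made for this example; they are not part of the Bud Scaler API.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class Endpoint:
    name: str
    region: str
    accuracy: float            # offline evaluation score, 0..1 (hypothetical)
    p95_latency_ms: float      # observed tail latency (hypothetical)
    cost_per_1k_tokens: float  # USD (hypothetical)


# Made-up sample fleet, for illustration only.
FLEET = [
    Endpoint("gpu-eu", "eu-west", 0.92, 180.0, 0.60),
    Endpoint("cpu-eu", "eu-west", 0.88, 450.0, 0.20),
    Endpoint("gpu-us", "us-east", 0.92, 150.0, 0.50),
    Endpoint("hpu-eu", "eu-west", 0.90, 220.0, 0.35),
]


def route(
    endpoints: Iterable[Endpoint],
    min_accuracy: float,
    max_latency_ms: float,
    allowed_regions: Set[str],
) -> Optional[Endpoint]:
    """Pick the cheapest endpoint that meets accuracy, latency, and region limits."""
    eligible = [
        e for e in endpoints
        if e.accuracy >= min_accuracy
        and e.p95_latency_ms <= max_latency_ms
        and e.region in allowed_regions
    ]
    return min(eligible, key=lambda e: e.cost_per_1k_tokens, default=None)


if __name__ == "__main__":
    choice = route(FLEET, min_accuracy=0.90, max_latency_ms=250.0,
                   allowed_regions={"eu-west"})
    print(choice.name if choice else "no eligible endpoint")
```

Treating region as a hard filter rather than a weighted score reflects the compliance angle: a data-residency rule is a constraint, not a preference.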

To guarantee reliability, SLO-aware scaling automatically adjusts resources up or down to meet uptime and compliance requirements. Meanwhile, the Scaler’s State-of-the-Art (SOTA) Performance optimizations intelligently deploy system-specific configurations to get the most out of your hardware. This, combined with its Distributed KV Cache Store & Prefill-Decode Disaggregation, maximizes hardware utilization and delivers a higher return on investment.
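
One simple way to picture SLO-aware scaling is a proportional rule: compare the observed metric with its target and resize the replica count by that ratio. The sketch below borrows the proportional formula used by the Kubernetes Horizontal Pod Autoscaler and applies it to a P95 latency target. It is an assumption for illustration, not a description of how the Bud Scaler decides; the function name, bounds, and tolerance are hypothetical.

```python
import math


def desired_replicas(
    current: int,
    observed_p95_ms: float,
    target_p95_ms: float,
    min_replicas: int = 1,
    max_replicas: int = 32,
    tolerance: float = 0.1,
) -> int:
    """Proportional scaling: desired = ceil(current * observed / target)."""
    ratio = observed_p95_ms / target_p95_ms
    # Inside the tolerance band, hold steady to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current
    proposed = math.ceil(current * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # Latency is 1.5x the target, so the rule asks for 1.5x the replicas.
    print(desired_replicas(current=4, observed_p95_ms=300.0, target_p95_ms=200.0))
```

Latency rarely falls in exact proportion to replica count, so in practice a rule like this would run repeatedly and converge over several iterations.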

Bud Sentinel: Your AI Security Suite

The Bud Sentinel is a comprehensive security and compliance suite designed to protect every layer of a Generative AI deployment. It provides robust security for your models, prompts, agents, infrastructure, and clusters, ensuring your AI applications are safe and compliant from end to end.

Built on a Zero Trust security framework, Bud Sentinel protects the entire AI lifecycle, from the moment a model is downloaded to its deployment and every inference thereafter. Its performance is a key differentiator, offering unbeatable guardrail performance with less than 10ms of added latency, even with multiple security layers activated.

To achieve this, it uses multi-layered scanners that combine multiple techniques, including Regex, fuzzy logic, bag-of-words, classifier models, and even advanced LLM-based scanning. The suite comes equipped with over 300 pre-built probes, but also allows you to create your own custom probes using datasets or Bud’s symbolic AI expressions, giving you granular control over your security policies. Looking ahead, Bud Sentinel will support Confidential Computing for Intel, Nvidia, and ARM hardware, providing an even higher level of data and model security.
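
The layering idea can be sketched as a cascade that runs cheap checks first and stops at the first hit. The example below covers only three of the techniques named above (regex, fuzzy matching, and bag-of-words) using the Python standard library; the classifier and LLM layers are omitted. Every pattern, phrase, threshold, and function name here is hypothetical and is not taken from Bud Sentinel.

```python
import re
from difflib import SequenceMatcher
from typing import Callable, List, Optional

# Hypothetical rules, for illustration only.
SECRET_PATTERN = re.compile(r"\b(?:sk|api)[-_][A-Za-z0-9]{16,}\b")
BLOCKED_PHRASES = ["ignore previous instructions"]
RISKY_WORDS = {"password", "exploit", "bypass", "jailbreak"}


def regex_layer(text: str) -> Optional[str]:
    # Cheapest layer: exact pattern match for key-like strings.
    return "regex:secret" if SECRET_PATTERN.search(text) else None


def fuzzy_layer(text: str, threshold: float = 0.85) -> Optional[str]:
    # Slide a phrase-sized window over the text to catch misspelled variants.
    lowered = text.lower()
    for phrase in BLOCKED_PHRASES:
        n = len(phrase)
        for i in range(max(1, len(lowered) - n + 1)):
            window = lowered[i:i + n]
            if SequenceMatcher(None, window, phrase).ratio() >= threshold:
                return "fuzzy:" + phrase
    return None


def bag_of_words_layer(text: str, min_hits: int = 2) -> Optional[str]:
    # Flag text containing several risky words, regardless of their order.
    words = set(re.findall(r"[a-z]+", text.lower()))
    return "bow:risky" if len(words & RISKY_WORDS) >= min_hits else None


LAYERS: List[Callable[[str], Optional[str]]] = [
    regex_layer, fuzzy_layer, bag_of_words_layer,
]


def scan(text: str) -> Optional[str]:
    """Run layers from cheapest to costliest; return the first verdict, else None."""
    for layer in LAYERS:
        verdict = layer(text)
        if verdict is not None:
            return verdict
    return None


if __name__ == "__main__":
    print(scan("Please ignroe previous instructions now"))
```

Ordering the layers by cost means most traffic is cleared or blocked before any expensive model-based check would need to run, which is one plausible way to keep added latency low.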

Bud AI Gateway

The Bud AI Gateway is a high-performance, multi-modal gateway that serves as a single entry point for all your AI workloads. Designed for both private and cloud-based AI models, it provides a robust and efficient way to manage AI traffic, guardrails, agents, and prompts.

Performance is a key differentiator, as the gateway offers unbeatable speed with a P95 latency of less than 1ms for over 10,000 queries per second (QPS). It’s incredibly versatile, supporting more than 200 cloud providers and any on-premise models, giving users complete flexibility. The gateway is also self-evolving, continuously learning from every response and adapting through feedback and test-time scaling to improve its efficiency over time.

With its multi-modality support, it handles a wide range of data types, including text, audio, images, and documents. Furthermore, it can deliver up to a 40% reduction in cost by using a clever, SLO-based service tier lane optimization, ensuring you get the best performance for the lowest possible price.

Bud Studio: Where Everyone Becomes an AI Builder

Bud Studio is the collaborative interface that democratizes AI creation across an entire organization. It’s designed to allow every user, regardless of technical skill, to become an “AI builder” by creating, consuming, and sharing agents, applications, and prompts. This is all powered by Bud’s AI Agent PaaS, which enables users to build AI workflows on their own data.

For enterprises and Cloud Service Providers (CSPs), the Studio offers robust features for control and management. It includes Enterprise RBAC & SSO, providing multi-tiered account access for admins, DevOps, and end-users, ensuring secure and seamless authentication. The platform is perfect for CSPs to provide Model as a Service or Agent as a Service, thanks to its multi-tiered user infrastructure that can be configured as an end-user PaaS or a full-featured studio environment.

Finally, Bud Studio is designed for easy integration with existing systems through its support for OpenStack & CMS. This, combined with its ability to simplify complex deployments into a single, intuitive offering, makes it the ideal platform for building AI-in-a-Box solutions that deliver turnkey AI capabilities to customers.

Bud Agent & Tool Runtime

The Bud Agent & Tool Runtime is a unified, scalable runtime for AI agents, tools, and Model Context Protocol (MCP) servers. It’s designed to orchestrate and manage these components as independent virtual servers, allowing them to scale separately based on demand.

Built on Dapr, this is an Internet-Scale Agent Runtime with production-ready features and protocol support, ensuring your AI applications are robust and can handle massive traffic. It also includes built-in MCP Orchestration, with a vast library of over 400 MCPs and the ability to handle tool discovery and orchestration.

As a full Agent PaaS, the runtime automatically manages and scales the entire agent infrastructure, including the database and authentication, so you don’t have to. The Agent/Prompt PaaS also comes with Automated D-UI (Artifacts), a user interface that can be customized, consumed, and shared by end users to create their own AI experiences. Finally, every tool and piece of code is protected by Tool Guardrails, which leverage Bud Sentinel or custom rules to ensure a secure and compliant environment.

In a Nutshell

In an era defined by the AI transformation, the role of a Cloud Service Provider is rapidly evolving. Simply offering bare-metal infrastructure is no longer enough to compete with hyperscalers or meet the sophisticated demands of modern enterprises. The future belongs to those who can provide a comprehensive, integrated platform that simplifies the entire AI lifecycle.

By adopting a stack like Bud AI Foundry, CSPs can go Beyond Bare Metal. They can turn their raw infrastructure into a high-value, AI-first service, unlocking new revenue streams, increasing customer stickiness, and establishing themselves as leaders in the AI ecosystem. The ability to offer a full-stack, enterprise-grade AI foundry—from a universal runtime and intelligent scaling to powerful security and a collaborative studio—is no longer a luxury; it’s a strategic imperative. The time to shift from providing just infrastructure to empowering innovation is now.
