Large Language Models (LLMs) are resource-intensive. Open-source models like LLaMA 2, Mistral 7B, Falcon 40B, and others offer flexibility for deployment on cloud, edge, or on-premise setups. However, for cost-effective deployments, inference optimization is a necessity. This report surveys recent inference optimization methods and best practices, focusing on open-source LLMs. We cover techniques to reduce latency, memory footprint, and compute costs while preserving accuracy. First, let’s address the key challenges in deploying LLMs in production;

- Memory & Compute Requirements: A model with tens of billions of parameters can occupy dozens or hundreds of gigabytes of memory. For example, a 70B parameter LLaMA-2 requires ~140 GB in 16-bit precision, needing multiple high-end GPUs. This makes deployment on limited hardware difficult. Even smaller models (7–13B) can strain edge devices in default precision.

- Latency: Autoregressive generation is inherently slow – each token is generated sequentially, and large models have complex computations per token. Without optimizations, users may face substantial delays for long outputs.

- Throughput and Scalability: Serving many concurrent users requires efficient batching and resource utilization. Naively queuing requests leads to latency spikes under load.

- Cost: Cloud GPU instances are expensive. Inefficient inference drives up operational cost. For on-premise, power and hardware costs are significant for continuous LLM workloads.

- Accuracy vs Efficiency Trade-off: Techniques that make models faster/smaller can degrade answer quality. Production deployments must balance optimization with maintaining accuracy and avoiding issues like hallucinations.

Addressing these challenges requires a careful blend of algorithmic, architectural, and systems-level optimizations. As LLMs transition from research prototypes to real-world applications, minimizing resource usage without compromising performance has become a top priority. Effective inference optimization ensures that models remain responsive, scalable, and cost-efficient across diverse deployment environments—whether in the cloud, on the edge, or on-premise. With this context in mind, we now explore a range of techniques that can significantly enhance inference efficiency while preserving the capabilities of open-source LLMs.

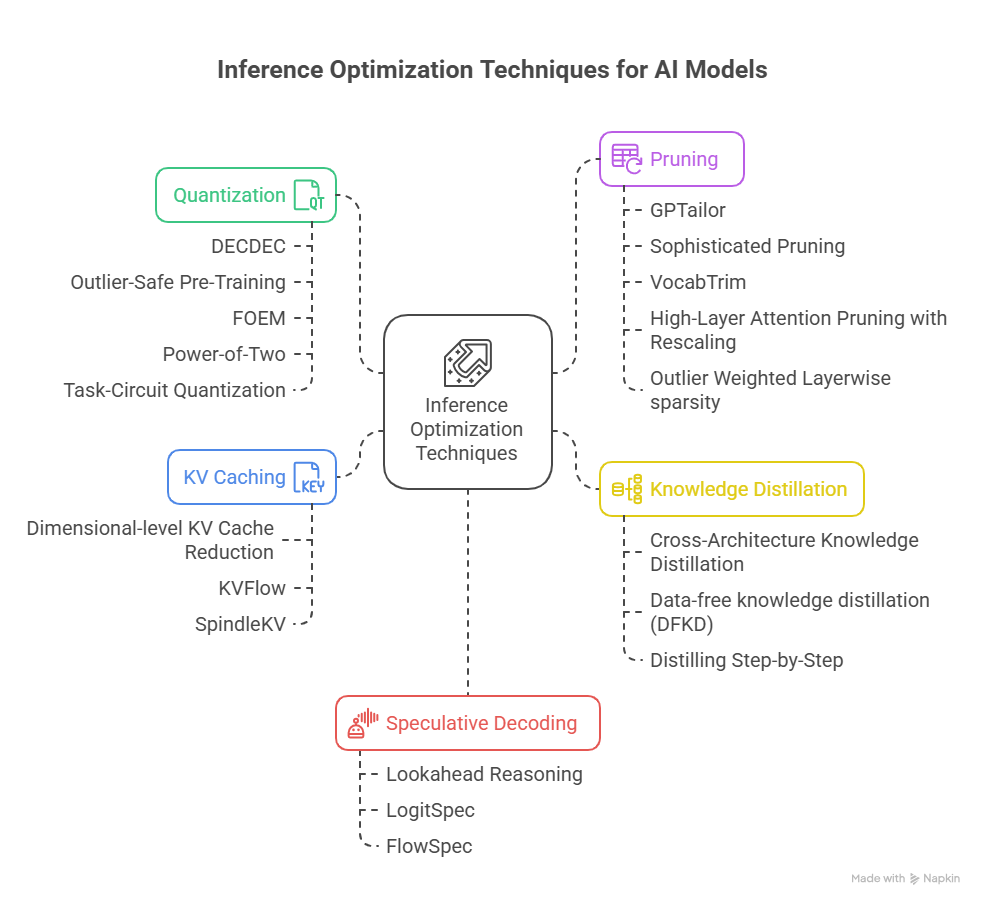

1. Quantization

Quantization reduces the numerical precision of model parameters (and sometimes activations), representing them with fewer bits. For instance, weights originally in 16-bit floating point may be quantized to 8-bit or 4-bit integers. This drastically shrinks model size in memory – a 4-bit model is half the size of an 8-bit model, and one-quarter the size of a 16-bit model. Smaller memory footprint means we can load bigger models on the same hardware or run the same model on lower-tier devices. It also reduces memory bandwidth usage, which often bottlenecks LLM inference.

Benefits:

- Memory and Cost: Quantization can cut memory usage by ~2–4X with minimal accuracy loss. For example, 4-bit quantized LLMs often retain performance comparable to full precision. This enabled the 70B-parameter LLaMA-2 model to be served on a single 48 GB GPU using 4-bit weights (≈35 GB), whereas it previously required multiple GPUs. Smaller memory needs also allow deployments on CPUs or edge devices (e.g. 7B models quantized to 4-bit fit in ~4 GB RAM, small enough for laptops or smartphones).

- Throughput: On memory-bound workloads, lower precision can improve speed by transferring less data per token step. Modern GPUs have specialized INT8/INT4 tensor cores to accelerate low-precision math. Quantized models have been shown to achieve up to ~2.4X single-stream speedups in some cases.

- Scalability: The smaller memory footprint allows serving more model replicas or more concurrent contexts per GPU, improving overall throughput for multi-user services.

Challenges:

- Accuracy Impact: Aggressive quantization can introduce approximation error. While 8-bit often preserves accuracy within ~1% and 4-bit can be nearly lossless, going to 3-bit or 2-bit leads to notable degradation. For example, below 4-bit, models start to suffer significant drops on benchmark performance. Research into outlier handling (e.g. keeping a few large-magnitude weights in higher precision) and quantization-aware training seeks to minimize this loss.

- Speed Trade-offs: Without specialized kernels, quantization may not always yield speed gains. If weights are stored in INT4/INT8 but activations remain FP16, hardware may need to upcast ints to higher precision during compute. This can reduce or negate speed benefits – indeed one study found naive 8-bit weight quantization sometimes slowed inference except for very large models (where memory savings improved caching). Tools like Bud Runtime, NVIDIA’s Transformer Engine and libraries like bitsandbytes provide optimized kernels to fully exploit low-precision math.

- Dynamic Range and Outliers: Transformer activations occasionally contain extreme outlier values that are hard to quantize without clipping. Techniques such as LLM.int8() (which keeps a small fraction of outlier channels in higher precision) and SmoothQuant address this by rescaling or selectively not quantizing certain components. Activation quantization remains an active research area.

There are a few research into 3-bit or even 2-bit quantization algorithms (e.g. GPTQ, SPQR). A comprehensive 2024 evaluation found that 4-bit post-training quantization can maintain performance very close to full precision across a range of tasks. However, 2-bit models saw complete performance collapse with naive methods, whereas more advanced approaches (spreading error or using mixed-precision) achieved moderate results. In practice, 4-bit is emerging as the sweet spot for major open models like LLaMA-2, with 8-bit used when absolute accuracy is required.

DecDEC is a novel inference method designed to improve the quality of low-bit quantized large language models (LLMs), especially in 3-bit and 4-bit precision settings. Its key innovation is storing residuals (the difference between full-precision and quantized weights) on the CPU and dynamically fetching them for only the most important channels, identified by activation outliers during each decoding step. This allows DecDEC to adaptively correct quantization errors without sacrificing the memory and speed benefits of quantization. Applied to a 3-bit LLaMA-3-8B-Instruct model, DecDEC reduced perplexity from 10.15 to 9.12 with minimal GPU memory overhead and only 1.7% inference slowdown.

Outlier-Safe Pre-Training (OSP) is a training methodology designed to eliminate extreme activation outliers in Large Language Models (LLMs), which are a major obstacle to effective quantization and on-device deployment. OSP introduces three innovations: the Muon optimizer (avoids privileged weight updates), Single-Scale RMSNorm (limits channel amplification), and a learnable embedding projection (controls activation magnitude from embeddings). Applied to a 1.4B-parameter model, OSP achieves a 35.7 average score across 10 benchmarks under 4-bit quantization—significantly outperforming a standard Adam-trained model (26.5)—with just 2% training overhead. OSP also reduces outlier-induced activation kurtosis from 1818.56 to 0.04, showing that such outliers are not intrinsic to LLMs but can be avoided through better training design.

FOEM is a new post-training quantization (PTQ) method for large language models (LLMs) that improves upon traditional compensation techniques by accounting for first-order gradient terms, which are often wrongly assumed to be negligible. Unlike standard approaches relying on second-order Taylor expansions, FOEM identifies and corrects accumulated first-order deviations caused by progressive compensation. It estimates gradients efficiently—without costly backpropagation—by using the difference between quantized and full-precision weights, and leverages precomputed Cholesky factors to handle Hessian inverses in real time. Experiments show FOEM significantly outperforms existing methods like GPTQ, reducing perplexity by 89.6% in 3-bit quantization and boosting Llama3-70B’s MMLU accuracy from 51.7% to 74.9%, closely matching full-precision performance.

Power-of-Two (PoT) quantization is introduced as an efficient framework for compressing Large Language Models (LLMs), particularly targeting faster inference and higher accuracy at extremely low precisions (2- and 3-bit formats). While traditional PoT quantization works well on CPUs, it struggles on GPUs due to sign-bit entanglement and sequential bit manipulations. This new framework addresses those limitations and introduces a two-step post-training algorithm: initializing robust quantization scales and refining them with a small calibration set. The method not only outperforms state-of-the-art integer quantization in accuracy at low bit-widths but also speeds up dequantization on GPUs, enabling more efficient floating-point inference.

Task-Circuit Quantization (TaCQ) is a novel mixed-precision post-training quantization (PTQ) method that maintains high model performance in ultra-low-bit settings (2–3 bits) by selectively preserving key weights in higher precision. Inspired by circuit discovery, TaCQ identifies “weight circuits” — small, task-critical sets of weights — and keeps them at 16-bit precision, while quantizing the rest to low-bit formats. It estimates the performance impact of quantization using both weight deviation and gradient-based signals, enabling task-aware precision allocation. TaCQ outperforms state-of-the-art methods across diverse tasks (QA, math reasoning, text-to-SQL), recovering 96% of Llama-3-8B-Instruct’s full-precision MMLU score at only 3.1-bit average precision, and achieving 14.74% better accuracy than SliM-LLM in the 2-bit regime. Notably, TaCQ remains effective even without task-specific conditioning, showing its robustness.

2. Pruning and Sparsity

Pruning removes less important weights or neurons from the model, creating a sparse network that requires less computation. Pruning can be unstructured (drop individual weights) or structured (remove entire neurons, attention heads, or even layers). The latter is easier to accelerate on hardware. For example, structured layer pruning might reduce a 36-layer transformer to 24 layers for faster inference (often combined with some fine-tuning to mitigate accuracy loss).

Modern hardware and libraries increasingly support sparse operations. NVIDIA’s Ampere and newer GPUs implement a 2:4 structured sparsity pattern at the hardware level – meaning two out of every four weight values can be zeroed and the matrix multiply can skip those zeros. By zeroing ~50% of weights (with minimal effect on model quality), one can potentially double throughput using these sparse tensor cores. Research projects like SparseGPT (2023) prune GPT-sized models with minimal accuracy drop by targeting small weight values.

Benefits:

- Latency and Throughput: Removing redundant weights reduces the number of operations per token. Structured pruning that aligns with hardware capabilities yields real speedups. Sparsity can also be combined with quantization for multiplicative gains (smaller and fewer weights).

- Memory: Pruned models have fewer parameters to store. If unstructured, one must use specialized sparse storage formats (which store only nonzero values and indices) to get memory benefits.

- Progressive Model Size Scaling: Pruning allows obtaining a range of model sizes from one trained model. For example, starting from a 15B base, researchers pruned to 8B and then 4B, each time fine-tuning to recover accuracy. This produces a “family” of models suited to different resource budgets.

Challenges:

- Accuracy Loss: Removing parameters can harm model performance, especially if done aggressively. Some form of retraining or knowledge distillation is often needed after pruning to recover lost knowledge. Careful criteria (magnitude of weights, impact on loss) must be used to choose what to prune.

- Complexity: Unstructured sparsity (random zeros) is hard to exploit – many frameworks see little speedup unless sparsity is very high (90%+). Structured pruning (dropping entire components) is more directly beneficial but may have larger accuracy impact per removed unit.

- Diminishing Returns: Many LLMs have a lot of redundancy, but not infinite. Removing 10–20% of weights can often be done with minor impact, but pushing further starts to hurt quality steeply. Thus, pure pruning alone might not yield a small model with high accuracy – it shines when combined with other techniques.

Recent advancements: A noteworthy 2024 approach by NVIDIA combined structured pruning with distillation to produce much smaller LLMs without sacrificing performance. Their work on “Minitron” models pruned and distilled a Llama 15B down to 8B and 4B, and reported improved MMLU benchmark scores (by 16%) compared to training a 4B model from scratch, while matching the performance of other state-of-the-art 7B models. This demonstrates that pruning can yield efficient models that outperform equivalently sized models trained naively, when guided by a larger model’s knowledge. Given hardware trends, research into sparsity-aware transformers (including block-sparse attention patterns, etc.) is accelerating to leverage these opportunities in both cloud GPUs and even on CPUs.

GPTailor introduces a novel model compression strategy for Large Language Models (LLMs) that goes beyond traditional single-model structured pruning by combining and merging layers from multiple fine-tuned variants. The approach formulates model tailoring as a zero-order optimization problem, exploring a search space with three key operations: layer removal, layer selection from different models, and layer merging. This enables the resulting model to retain the strengths of multiple fine-tunes while reducing size. Applied to LLaMA2-13B, the method achieves ~97.3% of original performance while removing ~25% of parameters, outperforming previous state-of-the-art pruning techniques.

Sophisticated Pruning presents a fine-grained pruning strategy for LLM-based recommender systems, aiming to significantly reduce model size while maintaining recommendation quality. Unlike prior methods that mainly address inter-layer redundancy, this approach identifies and exploits intra-layer redundancy within key components like self-attention and MLPs. The proposed three-stage pruning framework progressively reduces parameters from width (intra-layer) to depth (layer-wise), with each stage followed by distillation-based performance restoration. Experiments on three datasets show that the method retains 88% of original model performance while pruning over 95% of non-embedding parameters, demonstrating strong potential for real-world deployment efficiency.

VocabTrim, a training-free technique to enhance the efficiency of drafter-based speculative decoding (SpD) by reducing inference overhead during the drafting stage. In SpD, smaller draft models generate multiple token candidates, which are then verified by a larger target LLM. The authors identify that using the full vocabulary of the target model in the drafter unnecessarily increases computation, especially for large-vocab LLMs. VocabTrim addresses this by restricting the drafter’s LM head to a smaller, high-frequency token subset from the target model’s vocabulary. While this slightly lowers the token acceptance rate, it significantly reduces latency in memory-bound scenarios, achieving a 16% memory-bound speed-up on Llama-3.2-3B-Instruct in the Spec-Bench benchmark—making it especially valuable for edge and resource-constrained deployments.

High-Layer Attention Pruning with Rescaling, presents a novel structured pruning algorithm for large language models (LLMs) that strategically targets attention heads in higher layers, rather than pruning uniformly across all layers. To address changes in token representation magnitudes caused by pruning, the method introduces an adaptive rescaling parameter that recalibrates representation scales post-pruning. Evaluated on multiple LLMs—including LLaMA3.1-8B, Mistral7B, Qwen2-7B, and Gemma2-9B—across 27 datasets and various tasks, the approach consistently outperforms existing pruning methods, with especially strong gains in generation tasks.

Outlier Weighted Layerwise sparsity (OWL), is a pruning method that assigns non-uniform sparsity ratios proportional to each layer’s outlier frequency. This approach better aligns pruning with layer characteristics, leading to more effective model compression. Their empirical evaluation on LLaMA-V1 models demonstrates the advantages of OWL over uniform pruning.

3. Knowledge Distillation

Distillation trains a smaller student model to replicate the behavior of a large teacher model. The teacher (e.g. LLaMA2-70B) generates either logits or probabilities on a training corpus (or synthetic data), and the student (e.g. 7B) is trained to match those outputs (in addition to any ground-truth labels). The intuition is that the teacher’s outputs provide a richer training signal than hard labels alone, effectively transferring knowledge about language and tasks to the smaller model.

For open-source LLMs, distillation is a powerful way to get “near-large-model” performance at lower cost. A famous earlier example is DistilBERT, which compressed a BERT model by 40% while retaining ~97% of its language understanding capability and running ~60% faster. Recent efforts bring similar ideas to LLMs: e.g., Stanford’s Alpaca and Meta’s smaller LLaMA variants can be seen as forms of task-specific distillation (using outputs of text-davinci or larger models to train a 7B model on instruction following).

Benefits:

- Drastic Model Size Reduction: A well-distilled model might achieve performance close to a model several times its size. This is key for edge deployments where only a small model can run. For instance, researchers demonstrated a distilled 1.3B model achieving competitive accuracy with a 6B model on certain reasoning tasks.

- Inference Speed: The student model, being smaller (fewer layers or hidden units), is much faster per token and uses less memory. This translates to lower latency and higher throughput in production.

- Retention of Knowledge: Distillation tends to preserve the teacher model’s strengths (and biases…) in the student. A student may even generalize better in some cases because the teacher’s soft targets convey extra information (like uncertainty) beyond hard labels.

Challenges:

- Imperfect Imitation: A student will rarely reach the full performance of the teacher, especially on tasks requiring the full capacity of the larger model. Some complex reasoning or knowledge might be lost in translation.

- Training Cost: Distillation itself is a training procedure – one must generate potentially massive amounts of teacher data and fine-tune the student model, which is not trivial for very large teachers. It’s cheaper than training from scratch, but still a non-negligible process.

- Domain Shift and Tokenizer Issues: If teacher and student use different tokenizers or architectures, aligning them can be tricky (there is ongoing work on cross-tokenizer distillation). Also, the student model might need additional fine-tuning to perform well on specific downstream tasks or to align with human preferences (e.g. distilled models may still need instruction tuning or RLHF).

Recent researches has focused on task-specific distillation of LLMs. One example is “Distilling Step-by-Step” where a teacher’s chain-of-thought reasoning steps are used to supervise the student, yielding smaller models that better emulate the reasoning of large models. Another advanced scenario is mixed-distillation: using multiple teachers or combining labeled and unlabeled (teacher-generated) data to train a student – this has shown to improve reasoning ability of smaller models. Perhaps most impressively, as mentioned earlier, NVIDIA’s research showed that combining structured pruning with knowledge distillation can produce tiny models (4B) that still perform very well. This opens the door to on-prem deployments where a 4B model (taking <10 GB memory) might replace a 7B–13B model with only minor quality trade-offs.

Cross-Architecture Knowledge Distillation develops a lightweight, edge-deployable retinal disease classifier designed for resource-limited settings, such as IoT devices like the NVIDIA Jetson Nano. A large vision transformer (ViT) teacher model, pretrained with I-JEPA self-supervised learning, classifies fundus images into four categories: Normal, Diabetic Retinopathy, Glaucoma, and Cataract. Using a novel cross-architecture knowledge distillation framework—including Partitioned Cross-Attention and Group-Wise Linear projectors plus multi-view robust training—the large ViT is compressed into an efficient CNN-based student model. Despite having 97.4% fewer parameters, the student retains about 93% of the teacher’s diagnostic accuracy, achieving 89% classification accuracy, demonstrating effective compression without sacrificing clinical performance. This approach offers a scalable AI solution for early retinal disease detection in under-resourced environments.

Data-free knowledge distillation (DFKD), where the teacher model is non-transferable learning (NTL)—meaning it resists transferring knowledge from in-distribution (ID) to out-of-distribution (OOD) domains. The authors reveal that NTL teachers mislead DFKD by shifting the generator’s focus toward misleading OOD knowledge, hindering effective ID knowledge transfer. To address this, they propose Adversarial Trap Escaping (ATEsc), which separates synthetic samples based on their adversarial robustness: fragile samples are treated as ID-like for standard distillation, while robust samples are viewed as OOD-like and used to actively forget OOD knowledge. Experiments show that ATEsc significantly improves DFKD performance against NTL teachers, enhancing robustness and knowledge transfer without access to real data.

4. Efficient Architectures and Transformer Variants

Beyond compressing existing models, another angle is to design or fine-tune the model architecture itself for efficiency:

- Efficient Attention Mechanisms: The self-attention in transformers is a primary compute and memory hog, especially for long sequences. Techniques like FlashAttention reorder computations and use tiling to reduce memory usage and avoid unnecessary operations, enabling longer context windows with less overhead. Many 2023+ open models (e.g. Mistral, LLaMA-2) integrate such optimized kernels to handle 8K or more tokens with manageable speed. In fact, Mistral 7B uses grouped-query attention (a mix of multi-head and multi-query attention) and a sliding window approach to achieve an 8,000-token context length at low latency. This allows it to outperform larger models like LLaMA-2 13B on tasks, thanks to both training and these efficiency tweaks. Another approach is block-sparse or sliding window attention (as in Mistral) which limits full attention to a fixed window and uses previous layers to carry longer-range info.

- Layer Reduction / Shallow Decoders: Reducing the number of transformer layers decreases computation. Some production systems use smaller decoder stacks or even early exiting (see Section 1.7) to avoid running all layers for every token. As an example, OpenAI’s GPT-3.5 Turbo was rumored to use fewer layers than GPT-3 for speed (while compensating with other training strategies), though exact details are proprietary.

- Parameter Sharing: Some models share weights across layers (as done in ALBERT for BERT models) to reduce size. This is less common in large LLMs but is a potential area to explore for smaller footprint.

- Mixture-of-Experts (MoE): MoE models have multiple expert sub-networks, and a gating mechanism activates only a few for each input. This way, the parameter count can be huge, but each token only uses a fraction of them, reducing computation per token. While MoEs (like Switch Transformers) were explored by Google in 2021, open MoE LLMs are not yet mainstream. However, they represent a possible path to scale without linear increase in compute.

- Optimized Numerics: Aside from quantization, using newer numeric formats like FP8 (8-bit floating point) can give a blend of range and precision. NVIDIA’s Hopper GPUs introduced FP8 support, and some LLM inference pipelines leverage FP8 for activations to save memory (with minimal quality loss).

Benefits: Architectural optimizations can yield intrinsic efficiency gains without needing external compression steps. For instance, FlashAttention can provide up to 8× faster attention computation on long sequences, directly speeding up inference for large context. Similarly, Mistral 7B’s design trades a bit more memory (due to long context) for significantly better throughput and performance-per-parameter. These improvements often come with no loss in accuracy – they are purely making the model or its computations more efficient.

Challenges: Modifying architecture usually requires training or fine-tuning the model in that form – you can’t easily retrofit a different attention mechanism without some retraining. There’s also a validation burden: ensuring that an optimized kernel (like FlashAttention or a custom CUDA kernel) is stable and correctly integrated. Open-source projects like xFormers and DeepSpeed provide many of these building blocks (e.g., FlashAttention, optimized layer norm, etc.) that can be toggled on during model loading or fine-tuning.

5. Key-Value Caching and Memory Management

KV caching is an essential optimization built into how Transformers are used at inference time. As the model generates tokens, each transformer layer produces Key and Value matrices for each new token which are used in attention for future tokens. Instead of recomputing all past keys/values from scratch for each step, the model caches them in memory and appends new ones each iteration. This cache prevents redundant computation and is critical for performance when generating long sequences. All modern LLM libraries use KV caching by default.

However, the KV cache itself consumes a lot of memory – often comparable to the model weights for long prompts. For example, the cache for a single 2048-token sequence on LLaMA-13B can take 1.7 GB of GPU memory. If multiple sequences are processed in parallel (batching), each needs its own cache, which grows with sequence length. This variable, growing memory usage can cause fragmentation and GPU memory pressure. A study found that traditional frameworks waste 60–80% of memory due to fixed-size allocations for KV caches that aren’t fully used.

Memory optimization techniques address this issue:

- PagedAttention: Introduced by vLLM (2023), PagedAttention treats the KV cache like a virtual memory system. It breaks the cache into fixed-size blocks (pages) and allows those blocks to be non-contiguous in physical memory. A page table maps each sequence’s logical token positions to these blocks. This nearly eliminates fragmentation – only the last block of each sequence may have some slack, resulting in <4% memory waste. The result is that far more of GPU memory can be utilized for actual data, allowing larger batch sizes or longer contexts on the same hardware.

- KV Cache Compression: Some research explores quantizing or compressing the KV tensors themselves (since they are floating-point). For instance, 8-bit or 4-bit KV cache storage can cut cache memory in half or quarter at the cost of some precision. This is an emerging idea to extend context length without linear memory growth.

- Memory Offloading: In constrained environments, one can offload parts of the KV cache to CPU RAM or even disk (with a speed penalty) when the full context isn’t needed immediately. Some frameworks allow spilling out older parts of the cache for very long conversations, re-loading them if needed.

- Shared Contexts: In use-cases like retrieval-augmented generation (RAG) with a fixed prompt context (e.g., same knowledge context for many questions), the KV cache for the prompt can be computed once and reused across queries. Techniques like PagedAttention facilitate this by allowing multiple sequences to point to the same underlying KV blocks for a given prefix.

Benefits: Better memory management via caching means longer prompts and histories can be handled without running out of VRAM. It also boosts throughput by enabling larger batch sizes – if memory isn’t wasted, we can pack more sequences onto the GPU. The vLLM system showed that by eliminating KV cache fragmentation and doing smarter batching, one can achieve up to 24× higher throughput than naive HuggingFace Transformers serving, and ~3.5× higher than even optimized HF Text Generation Inference (TGI) server on the same hardware.

Challenges: More complex cache management adds engineering complexity and slight runtime overhead (e.g., an indirection for page tables). But the benefits far outweigh these for large-scale serving. Another consideration is cache invalidation – in multi-turn conversations, if the model’s state (cache) is reused between turns to preserve conversational context, one must carefully manage that memory and ensure old caches are cleared when a new session begins.

Dimensional-level KV Cache Reduction addresses the efficiency bottleneck caused by the growing Key-Value (KV) cache in Transformer Decoder-based large language models (LLMs) during inference. The authors propose KV-Latent, a method that down-samples KV vectors into a lower-dimensional latent space, significantly reducing memory usage and improving inference speed with minimal extra training (under 1% of pre-training). They also improve the stability of Rotary Positional Embeddings on these compressed vectors by adjusting the frequency sampling to reduce noise. Experiments demonstrate the effectiveness of KV-Latent across various model architectures, and the study explores the separate impacts of compressing Keys versus Values. This approach enables more efficient LLM systems with reduced KV cache footprint, facilitating faster and lighter deployments.

KVFlow, a workflow-aware key-value (KV) cache management system designed to improve efficiency in large language model (LLM) agentic workflows. Unlike existing systems that use a Least Recently Used (LRU) eviction policy, KVFlow models agent execution as an Agent Step Graph and predicts each agent’s steps-to-execution to better preserve KV caches likely to be reused. It applies a fine-grained eviction policy at the KV node level and efficiently manages shared prefixes in tree-structured caches. Additionally, KVFlow features overlapped KV prefetching, loading tensors in the background before they are needed, which reduces stalls. Experiments show KVFlow achieves up to 1.83× speedup for single large-prompt workflows and 2.19× speedup when handling many concurrent workflows, significantly outperforming prior cache management methods.

SpindleKV introduces a novel method for reducing the memory footprint of Key-Value (KV) caches in large language model (LLM) inference. Unlike prior approaches that mainly focus on evicting redundant KV cache entries in deeper layers, SpindleKV balances reduction across both shallow and deep layers. It uses an attention weight-based eviction strategy for deep layers and a codebook-based replacement learned via similarity and merging policies for shallow layers. SpindleKV also overcomes challenges related to Grouped-Query Attention (GQA) that hinder other eviction methods. Experiments on multiple benchmarks and LLMs show that SpindleKV achieves better KV cache reduction while maintaining or improving model performance compared to baseline methods.

6. Batching and Concurrent Inference

Batching is the practice of processing multiple requests together to better utilize hardware parallelism. Large transformers run much more efficiently on GPUs when they process a batch of inputs, rather than single input at a time, due to how GPU cores vectorize operations. Batching amortizes the cost of loading model weights into GPU registers across multiple sequences, and keeps the GPU’s matrix units busy.

However, straightforward (static) batching has limits in the context of LLMs:

- Different requests often want to generate different lengths of output. In a static batch, all sequences must wait until the longest finishes, causing bubbles where some threads are idle. This is known as the “tail latency” problem in batching.

- If traffic is low or queries arrive unpredictably, you may not always have enough requests to form a large batch without introducing delay.

Dynamic batching (in-flight batching): Modern inference servers use techniques to continuously batch and intermix incoming requests on the fly. For example, vLLM’s continuous batching will add new requests to an ongoing batch as soon as another sequence in that batch finishes, so the GPU is never sitting idle waiting for an entire batch cycle to complete. This is akin to a conveyor belt rather than discrete batches – the server dynamically adjusts which sequences are grouped together at each decoding step. It mitigates the “longest sequence” problem by not binding sequences together for longer than necessary.

Multi-model batching: In on-prem or cloud scenarios, one might also batch across models (e.g., run two different 7B models on the same GPU in a batch, if they are small) – though this is less common, some serving stacks allow time-multiplexing or parallel execution of multiple models for efficiency (especially if using smaller models in ensembles, see Section 3).

Concurrency patterns: Another related optimization is asynchronous generation – allowing one thread to continue the decode loop for a given request while others start new requests, which improves overall throughput and latency distribution.

Benefits:

- Higher Throughput: Batching is the simplest way to get more tokens per second out of a GPU. Utilizing techniques like continuous batching can improve throughput by several times (vLLM reported 3–4× throughput vs non-batching, and 1.3–1.5× over static batching approaches in TGI).

- Lower Cost per Query: By serving multiple queries at once, you amortize the energy and time. For cloud providers, this means fewer GPU instances are needed to handle the same QPS (queries per second).

- Managing Variability: Dynamic batching smooths out the latency for shorter requests (they no longer get stuck behind extremely long generations). It also tends to naturally prioritize urgent workloads – if a fast query comes in, it can often be executed in parallel rather than waiting.

Challenges:

- Complex Implementation: In-flight batching and similar optimizations add complexity to the server. Keeping track of many sequences at different stages and ensuring the correctness of output requires careful engineering.

- Latency vs Throughput Trade-off: Batching by nature adds a bit of latency (you might wait a few milliseconds to accumulate a batch). For interactive applications requiring ultra-low latency for single queries, you might use batch size 1. Production systems often set a small time window (e.g. 5–20ms) to gather batchable requests – this adds negligible latency but hugely boosts throughput. Tuning this is important per use-case.

- Memory Limits: Larger batches consume more memory for activations and KV cache. There’s an upper bound where a batch can exhaust GPU memory. Sophisticated batching systems might dynamically adjust batch size based on available memory or sequence lengths.

Aside from vLLM’s approach, companies like OpenAI and Google have internal scheduling systems to maximize GPU utilization. In open source, Text Generation Inference (TGI) by Hugging Face (written in Rust) introduced features like priority batching (differentiating between realtime vs batch jobs). We also see research on optimally cutting beams in beam search and sorting sequences by length to reduce padding waste. Continuous batching remains a cutting-edge feature that open solutions like vLLM have pioneered, and it’s becoming more standard in 2024-era LLM servers.

7. Speculative Decoding (Accelerated Autoregression)

Speculative decoding is an algorithmic technique to accelerate the autoregressive decoding phase by generating multiple tokens in parallel, using a guiding “draft” model. Normally, an LLM must generate tokens one-by-one, since each token depends on the previously generated token’s state. Speculative decoding breaks this limitation by employing a second, faster model to predict a chunk of tokens, and then having the main model validate those tokens in one go.

A common setup: let A (assistant) be a small, fast model and B (base) be the large, accurate model. We want B’s output but faster. The process:

- Run model A for, say, k steps autoregressively to generate k tokens “speculatively” (very quickly since A is small).

- Then feed those k tokens into model B in a single batch to see what B would have produced next.

- If B’s output matches all k speculative tokens, we accept them (great, we just skipped k single-step iterations of B). If B diverges at some point (say at token j < k), we accept the tokens up to j-1, then have B continue from there (or invoke A again to propose new tokens).

This way, large model B runs far fewer steps (it can “jump ahead” using A’s guesses). The key is that A’s guesses need to be fairly good so that B accepts a high percentage of them, otherwise you waste time computing drafts that get thrown out. Typically, A is tuned to be more optimistic (e.g., sampling from a higher temperature or fine-tuned to mimic B’s distribution) to improve acceptance rate.

Speedups: If done well, speculative decoding can yield 2–3× faster generation without degrading output quality. Google’s 2023 research (called “Speculative Sampling” or “Assisted Decoding”) demonstrated around 2× speedup on GPT-3 class models using a 1.3B draft model for a 6B main model, for instance. More recently, Apple’s 2024 Speculative Streaming (which fuses draft and verifier into one model via a special fine-tuning objective) reported 1.8× to 3.1× speedups on tasks like summarization, with no quality loss. These numbers are significant given they effectively multiply throughput without additional GPUs.

Benefits:

- Lower Latency: Speculative decoding directly reduces the number of serial steps needed, so responses finish sooner.

- Scalable Efficiency: It’s complementary to other optimizations – you can use a quantized smaller model A to draft for a large model B. The method doesn’t require hardware changes, just clever use of existing models.

- No Model Quality Trade-off: In theory, if B ultimately verifies everything, the final output is exactly what B would have produced on its own (just faster). So you don’t sacrifice accuracy/honesty of the large model as you would if you simply downsized it. Even if some draft tokens are rejected, B will overwrite them, maintaining correctness.

Challenges:

- Auxiliary Model Training: You need a suitable draft model. If an appropriate small model doesn’t exist, one might train or fine-tune a model to be a “speedy predictor” for the large model. This is an extra step. (Apple’s new approach avoids a separate model by multi-task training the main model, which is promising for simplicity).

- Complex Implementation: The generate-verify loop is more complex to implement and not yet widely available in off-the-shelf libraries (though there are proofs-of-concept).

- Limited by Draft Quality: If the small model A is too weak and its guesses are often wrong, B will reject tokens frequently, reducing or negating the speed benefit. In worst case, it could even slow things down (if B is constantly rerunning steps). Thus, the draft model needs to have a reasonably high precision in predicting B’s outputs. This often implies the small model was trained or fine-tuned specifically to approximate the larger model’s next-token predictions.

- Less Benefit on Short Outputs: If you’re only generating a handful of tokens (e.g., a short answer), the overhead of speculative decoding setup might not be worth it. It shines for longer generated texts.

Recent advancements: Aside from Apple’s single-model speculative decoding, there’s work on adaptive speculative lengths and even chaining multiple draft models. Another approach called “early-and-refine” (related to early exit, next section) uses the model’s own lower layers to draft and higher layers to verify, instead of an external model. This can be seen as an internal speculative decoding. Microsoft researchers also introduced Speculative Beam Search and Speculative Sampling with RNN draft models (Recurrent Drafting, to generate many tokens quickly) – pushing acceptance rates higher. These are cutting-edge (late 2024) developments, but not yet mainstream in open deployments. We can expect speculative decoding to become more accessible via libraries in 2025, especially as open-source LLM ecosystems incorporate these research ideas.

Speculative Decoding with Lookahead Reasoning improves the speed of decoding long chain-of-thought reasoning in language models by introducing Lookahead Reasoning, which adds a second layer of parallelism at the step level. Unlike traditional token-level speculative decoding, this method allows multiple future reasoning steps to be proposed and verified in batches, only regenerating incorrect steps. This approach boosts speedup from about 1.4x to 2.1x on benchmarks like GSM8K and AIME, without losing answer quality. It also scales better with increased GPU throughput.

LogitSpec, a method to improve retrieval-based speculative decoding for accelerating large language model inference. Unlike prior retrieval-based SD methods that struggle to find accurate draft tokens, LogitSpec expands the retrieval range by using the model’s last token logit to predict not only the next token but also the token after that. This two-step process—speculating the next-next token and retrieving references for both tokens—boosts draft token accuracy without any additional training. Experiments show LogitSpec achieves up to 2.61x speedup and 3.28 mean accepted tokens per step, and it can be easily integrated into existing inference frameworks.

FlowSpec, a pipeline-parallel, tree-based speculative decoding framework designed to improve distributed inference of large language models (LLMs) at the network edge. FlowSpec addresses the low utilization of pipeline parallelism when inference requests are sparse by combining three key strategies: score-based step-wise verification to prioritize important draft tokens, efficient draft management to prune invalid tokens while preserving causality, and dynamic draft expansion to generate high-quality speculative inputs. Experiments on a real-world testbed show that FlowSpec significantly boosts inference speed, achieving speedup ratios around 1.28 across various models and setups.

Other Emerging Techniques

Finally, a grab-bag of emerging optimization methods worth noting:

- Early-Exit Models: These are transformers trained with the ability to exit generation early if sufficient confidence is reached at a lower layer. Essentially, at each transformer layer, an auxiliary classifier predicts the next token; if a lower layer is “sure” enough, the model can skip the remaining layers for that token. Meta AI’s LayerSkip (2024) implemented this by training LLaMA models with layer dropout and an early-exit loss that supervises intermediate layers to predict tokens. At inference, the model tries to emit tokens using, say, layer 20 instead of layer 40, and only falls back to deeper layers when needed. Combined with a self-speculative mechanism (verifying the early exit with later layers, similar to draft & verify), LayerSkip achieved 1.3× to 2.1× speedups on generation tasks with minimal quality loss. Early-exit is conceptually similar to a student model embedded within the larger model – it dynamically uses “just enough” of the network for each token, saving compute.

- Ensembles and Cascades: Using multiple models in clever ways can optimize inference. A cascade might use a cheap model to handle easy queries and only send hard queries to a big model, reducing average latency/cost. For example, you could deploy a 7B model alongside a 70B model; if the 7B is confident in an answer (above some score), you return it immediately, otherwise you consult the 70B. This requires a confidence estimation mechanism, but it’s a practical pattern to reduce usage of the expensive model. Similarly, ensembles of small models can sometimes outperform a larger model, especially if each is specialized – though running N models is usually slower, ensemble techniques like MoE (mentioned earlier) aim to get the benefit of specialization within one model’s framework.

- Retrieval-Augmented Generation (RAG) for Efficiency: We often think of RAG (Section 3.3) for accuracy, but it can also make inference cheaper. A smaller model with access to a relevant document from a vector database can produce a correct answer that might have required a much larger param model without retrieval. In other words, RAG lets you leverage external memory (search index) instead of heavy internal param count. This can allow using a 7B model where you might otherwise need a 70B to recall as much knowledge. Moreover, by grounding answers in retrieved text, the model can be more deterministic, potentially allowing more aggressive response truncation or simpler decoding (since less “creative” generation is needed, the model can copy relevant facts from context).

- Compilation and Low-Level Optimizations: There are emerging compiler frameworks (e.g. Apache TVM, ONNX Runtime, BladeDISC) that optimize the model execution graph for specific hardware. These can fuse operations, optimize memory access patterns, and sometimes give decent speedups without changing the model. For instance, running LLaMA-2 on ONNX Runtime with OpenVINO on CPU can outperform naive PyTorch by using oneDNN libraries under the hood. Similarly, NVIDIA TensorRT-LLM (released 2023) compiles transformer models to highly optimized GPU kernels, supporting quantization and multi-GPU distribution seamlessly. These tools are becoming more user-friendly and support many open models – e.g., Meta provides a conversion for Llama 2 to TensorRT. Using them is a best practice to squeeze out extra latency reductions.

- Pipeline and Parallelism: When a model is too large for one device, tensor parallelism and pipeline parallelism are used to split it across GPUs or nodes. While these don’t speed up single-token latency (they often slightly increase it due to communication), they enable serving larger models at all in on-prem setups. Recent improvements in GPU interconnects (NVLink, InfiniBand) and libraries (DeepSpeed, Megatron-LM) have reduced the overhead. This is more about feasibility and scalability than optimization, but it’s worth noting as part of deployment patterns.

- Hardware Advances: New specialized hardware can itself be an optimization. NVIDIA’s GH200 “Grace Hopper” has massive memory allowing huge models to sit in GPU memory. Emerging AI accelerators (Google TPU v5, Graphcore IPUs, etc.) promise faster transformer block operations. While our focus is software techniques, practitioners should keep an eye on hardware-specific optimizations (like using TensorRT-LLM on NVIDIA, or OpenVINO on Intel, etc., to leverage hardware features fully).

The landscape of LLM inference optimization is evolving rapidly. Efficiency has become a paramount focus as model sizes grow. Techniques often complement each other – for example, one might run a quantized 4-bit model and use speculative decoding, or prune a model and then apply FlashAttention during inference. The next section discusses how these optimizations are applied in practice across different deployment scenarios.

.png)